Running WRF-SFIRE with real data in the WRFx system

These instructions set up the complete WRFx system using the latest version of every component, together with a couple of working examples. WRFx consists of WRF-SFIRE, a coupled atmosphere-fire model written in Fortran; wrfxpy, a Python system that automates runs on HPC clusters; wrfxweb, a visualization web interface; and wrfxctrl, a simulation web interface.

WRF-SFIRE model

A coupled weather-fire forecasting model built on top of the Weather Research and Forecasting (WRF) model.

WRF-SFIRE: Requirements and environment

On many systems, the required libraries are provided as environment modules, which you can load with a command such as:

module load netcdf

Install required libraries

- General requirements:

  - C-shell

  - Traditional UNIX utilities: zip, tar, make, etc.

- WRF-SFIRE requirements:

  - Fortran and C compilers (Intel recommended)

  - MPI (compiled using the same compiler, usually comes with the system)

  - NetCDF libraries (compiled using the same compiler)

- WPS requirements:

  - zlib compression library (zlib)

  - PNG reference library (libpng)

  - JasPer compression library

  - libtiff and geotiff libraries

See https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compilation_tutorial.php for the required versions of the libraries.

Set WRF environment

Set the paths of the installed libraries:

setenv NETCDF /path/to/netcdf
setenv HD5 /path/to/hdf5
setenv JASPERLIB /path/to/jasper/lib
setenv JASPERINC /path/to/jasper/include
setenv LIBTIFF /path/to/libtiff
setenv GEOTIFF /path/to/geotiff
setenv WRFIO_NCD_LARGE_FILE_SUPPORT 1

HD5 is optional, but without it configure will set up WRF to use uncompressed netcdf files.

Should your executables fail on unresolved libraries, also add all the library folders into your LD_LIBRARY_PATH:

setenv LD_LIBRARY_PATH /path/to/netcdf/lib:/path/to/jasper/lib:/path/to/libtiff/lib:/path/to/geotiff/lib:$LD_LIBRARY_PATH

WRF expects $NETCDF and $HD5 to have subdirectories include, lib, and modules.

To use system-installed netcdf and hdf5 on a system that uses the standard /usr/lib (such as Ubuntu), you may be able to simply use

setenv NETCDF /usr
setenv HD5 /usr

On a Linux distribution that uses /usr/lib64 (such as Red Hat and CentOS), make a directory with the links

include -> /usr/include
lib -> /usr/lib64
modules -> /usr/lib64/gfortran/modules

and point NETCDF and HD5 to it.
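The link directory described above is normally created by hand with ln -s. As a minimal sketch, the same layout could also be set up from Python. The helper name make_prefix_links and the directory you pass to it are illustrative assumptions, and the targets (in particular the gfortran modules path) should be adjusted to wherever your distribution installs these files.

```python
import os

# Link layout taken from the text above; adjust the targets to your system.
DEFAULT_LINKS = {
    "include": "/usr/include",
    "lib": "/usr/lib64",
    "modules": "/usr/lib64/gfortran/modules",
}


def make_prefix_links(prefix_dir, links=DEFAULT_LINKS):
    """Create prefix_dir and fill it with symbolic links (hypothetical helper)."""
    os.makedirs(prefix_dir, exist_ok=True)
    for name, target in links.items():
        link_path = os.path.join(prefix_dir, name)
        if os.path.islink(link_path):
            os.remove(link_path)  # replace a stale link from an earlier attempt
        os.symlink(target, link_path)
    return prefix_dir
```

After running it on a directory of your choice, point NETCDF and HD5 to that directory as described above.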

WRF-SFIRE: Installation

Clone github repositories

Clone WRF-SFIRE and WPS github repositories

git clone https://github.com/openwfm/WRF-SFIRE
git clone https://github.com/openwfm/WPS

Configure CHEM (optional)

setenv WRF_CHEM 1

Configure WRF-SFIRE

cd WRF-SFIRE
./configure

Select options 15 (INTEL ifort/icc dmpar) and 1 (simple nesting), if available.

Compile WRF-SFIRE

Compile em_fire

./compile em_fire >& compile_em_fire.log &
grep Error compile_em_fire.log

To be able to run real problems, compile em_real

./compile em_real >& compile_em_real.log &
grep Error compile_em_real.log

If any of the previous steps fails:

./clean -a
./configure

Add -nostdinc at the end of the CPP flag in configure.wrf, and repeat the compilation. If this does not fix the build, look for issues in your environment.
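Because the compile commands above run in the background, the grep check is only meaningful once the compilation has finished. As a minimal sketch, the same check can be done from Python and also reports line numbers. The function name find_error_lines is an illustrative assumption, not part of WRF-SFIRE.

```python
def find_error_lines(log_path, pattern="Error"):
    """Return (line_number, text) pairs for log lines that contain pattern."""
    hits = []
    # errors="replace" keeps the scan going if the log has odd bytes in it.
    with open(log_path, errors="replace") as log:
        for number, line in enumerate(log, start=1):
            if pattern in line:
                hits.append((number, line.rstrip("\n")))
    return hits
```

For example, find_error_lines("compile_em_real.log") returns an empty list when the log contains no line with the word Error.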

Configure WPS

cd ../WPS
export WRF_DIR=/path/to/WRF-SFIRE
./configure

Option 17 (Intel compiler (serial)) if available

Compile WPS

./compile >& compile_wps.log &
grep Error compile_wps.log

When the compilation has finished, the output of

ls -l *.exe

should list geogrid.exe, metgrid.exe, and ungrib.exe. If it does not, run

./clean -a
./configure

add -nostdinc at the end of the CPP flag in configure.wps, and repeat the compilation. If this does not fix the build, look for issues in your environment.
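The check for the three WPS executables can also be scripted. The following is a minimal sketch; the helper name missing_executables is an illustrative assumption and the build directory is whatever path your WPS clone lives in.

```python
import os

WPS_EXECUTABLES = ("geogrid.exe", "metgrid.exe", "ungrib.exe")


def missing_executables(build_dir, names=WPS_EXECUTABLES):
    """List the expected executables that are absent or not executable."""
    missing = []
    for name in names:
        path = os.path.join(build_dir, name)
        # os.path.isfile follows symbolic links, so linked executables count.
        if not (os.path.isfile(path) and os.access(path, os.X_OK)):
            missing.append(name)
    return missing
```

An empty result from missing_executables("/path/to/WPS") means all three programs were built.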

Get WPS static data

Download the tar file with the static data and untar it. This file contains the land use, elevation, soil type, and other data that WPS (specifically geogrid.exe) needs.

cd ..
wget https://demo.openwfm.org/web/wrfx/WPS_GEOG.tbz
tar xvfj WPS_GEOG.tbz

WRFx system

WRFx: Requirements and environment

Install Anaconda distribution

Download and install the Python 3 conda distribution for your platform. We recommend installing it into the user's home directory. For example,

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
chmod +x Miniconda3-latest-Linux-x86_64.sh
./Miniconda3-latest-Linux-x86_64.sh

The installation may instruct you to exit and log in again.

On a shared system, a system-wide Python distribution with conda may already be installed, perhaps as a module; try module avail to check.

Install necessary packages

The currently preferred process is to have conda find the versions for you:

conda create -n wrfx python=3.8
conda activate wrfx
conda install -c conda-forge gdal
conda install -c conda-forge netcdf4 h5py pyhdf pygrib f90nml lxml simplekml pytz pandas scipy
conda install -c conda-forge basemap paramiko dill psutil flask wgrib2
pip install MesoPy python-cmr shapely==2

On Linux x86-64, you can also use versions specified in a yml file:

wget https://demo.openwfm.org/web/wrfx/wrfx.yml
conda env create --name wrfx --file wrfx.yml

Note: The versions listed in the yml file may not be available on platforms other than Linux x86-64 (most common), or may just not work as package repositories evolve.

Set environment

Every time before using WRFx, make the packages available by

conda activate wrfx
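Once the environment is active, a quick way to see whether the packages are visible to Python is to look up their import names. This is a minimal sketch: the import names in WRFX_MODULES are assumptions about how the conda and pip packages listed above are imported (for example, gdal as osgeo and netcdf4 as netCDF4), and the list is not exhaustive.

```python
import importlib.util

# Import names assumed to correspond to the packages installed above.
WRFX_MODULES = [
    "osgeo", "netCDF4", "h5py", "pyhdf", "pygrib", "f90nml", "lxml",
    "simplekml", "pytz", "pandas", "scipy", "paramiko", "dill", "psutil",
    "flask", "shapely",
]


def missing_modules(names=WRFX_MODULES):
    """Return the module names that cannot be found in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

Calling missing_modules() in the wrfx environment should return an empty list; any names it returns point to packages that still need to be installed.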

WRFx: wrfxpy

WRF-SFIRE forecasting and data assimilation in Python using an HPC environment.

wrfxpy: Installation

Clone github repository

Clone wrfxpy repository

git clone https://github.com/openwfm/wrfxpy

Change to the directory where the wrfxpy repository has been created

cd wrfxpy

General configuration

An etc/conf.json file must be created with the keys discussed below. A template file etc/conf.json.initial is provided as a starting point.

cp etc/conf.json.initial etc/conf.json

Configure the queuing system, system directories, WPS/WRF-SFIRE locations, and workspace locations by editing the following keys in etc/conf.json:

"qsys": "key from clusters.json",
"wps_install_path": "/path/to/WPS",
"wrf_install_path": "/path/to/WRF",
"sys_install_path": "/path/to/wrfxpy",
"wps_geog_path" : "/path/to/WPS_GEOG",
"wget" : "/path/to/wget"

Note that all of these paths were created in the previous steps of this wiki, except the wget path, which lets you select a preferred version of wget. To find the default wget, run

which wget
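A missing comma or quote in etc/conf.json is easy to overlook. As a minimal sketch, the file can be parsed and checked for the keys listed above before running anything. The helper name missing_conf_keys is an illustrative assumption and is not part of wrfxpy.

```python
import json

REQUIRED_KEYS = (
    "qsys", "wps_install_path", "wrf_install_path",
    "sys_install_path", "wps_geog_path", "wget",
)


def missing_conf_keys(conf_path, required=REQUIRED_KEYS):
    """Parse a JSON config file and return the required keys it lacks."""
    with open(conf_path) as f:
        conf = json.load(f)  # raises json.JSONDecodeError on malformed JSON
    return [key for key in required if key not in conf]
```

Running missing_conf_keys("etc/conf.json") from the wrfxpy directory either reports a syntax error with its position or returns the keys that still need to be filled in.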

Cluster configuration

Next, wrfxpy needs to know how jobs are submitted on your cluster. Create an entry for your cluster in etc/clusters.json. Here we use speedy as an example:

{

"speedy" : {

"qsub_cmd" : "qsub",

"qdel_cmd" : "qdel",

"qstat_cmd" : "qstat",

"qstat_arg" : "",

"qsub_delimiter" : ".",

"qsub_job_num_index" : 0,

"qsub_script" : "etc/qsub/speedy.sub"

}

}

The file etc/qsub/speedy.sub should then contain a submission script template that makes use of the following variables, supplied by wrfxpy based on the job configuration:

%(nodes)d the number of nodes requested
%(ppn)d the number of processors per node requested
%(wall_time_hrs)d the number of hours requested
%(exec_path)s the path to the wrf.exe that should be executed
%(cwd)s the job working directory
%(task_id)s a task id that can be used to identify the job
%(np)d the total number of processes requested, equals nodes x ppn

Note that not all keys need to be used, as shown in the speedy example:

#$ -S /bin/bash
#$ -N %(task_id)s
#$ -wd %(cwd)s
#$ -l h_rt=%(wall_time_hrs)d:00:00
#$ -pe mpich %(np)d
mpirun_rsh -np %(np)d -hostfile $TMPDIR/machines %(exec_path)s

The script template should be derived from a working submission script.
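The placeholders in the template follow Python's %-style formatting with named keys. The sketch below shows how such a template can be filled from a dictionary, which is a convenient way to test a new template before submitting a job. It assumes that wrfxpy substitutes the values in this way, which the placeholder syntax suggests but the text above does not state; the function name render_submission and the sample values are illustrative.

```python
TEMPLATE = """#$ -S /bin/bash
#$ -N %(task_id)s
#$ -wd %(cwd)s
#$ -l h_rt=%(wall_time_hrs)d:00:00
#$ -pe mpich %(np)d
mpirun_rsh -np %(np)d -hostfile $TMPDIR/machines %(exec_path)s
"""


def render_submission(template, nodes, ppn, wall_time_hrs, exec_path, cwd, task_id):
    """Fill a %-style template; np is derived as nodes x ppn."""
    values = {
        "nodes": nodes,
        "ppn": ppn,
        "wall_time_hrs": wall_time_hrs,
        "exec_path": exec_path,
        "cwd": cwd,
        "task_id": task_id,
        "np": nodes * ppn,
    }
    # Keys that the template does not mention are simply ignored.
    return template % values


script = render_submission(TEMPLATE, nodes=2, ppn=12, wall_time_hrs=3,
                           exec_path="/path/to/wrf.exe", cwd="/path/to/job",
                           task_id="test_job")
```

With this formatting style, a literal percent sign in a template has to be written as %%, and using %(name)d with a string value raises a TypeError, so paths and ids need the s conversion.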

Note: wrfxpy already includes configurations for colibri, gross, kingspeak, and cheyenne.

Tokens configuration

When running wrfxpy, sometimes the data needs to be accessed and downloaded using a specific token created for the user. For instance, in the case of running the Fuel Moisture Model, one needs a token from a valid MesoWest user to download data automatically. Also, when downloading satellite data, one needs a token for some Earthdata data centers. All of these can be specified with the creation of the file etc/tokens.json from the template etc/tokens.json.initial containing:

{

"mesowest" : "token-from-synopticdata.com",

"ladds" : "token-from-laads",

"nrt" : "token-from-lance"

}

If you need any of these capabilities, create a token on the corresponding page, then do

cp etc/tokens.json.initial etc/tokens.json

and edit the file to include your previously created token.

To run the fuel moisture model, create a new MesoWest user at MesoWest New User, then acquire a token and put it in the etc/tokens.json file. A list of tokens can also be specified.

To acquire satellite data, create a new Earthdata user at Earthdata New User, then acquire the tokens from the respective data centers (LAADS and LANCE) and put them in the etc/tokens.json file. Some data centers need to be accessed using the $HOME/.netrc file, so we recommend creating $HOME/.netrc as follows

machine urs.earthdata.nasa.gov login your_earthdata_id password your_earthdata_password
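To confirm that the .netrc entry is well formed, it can be read back with Python's standard netrc module. This is a minimal sketch; the helper name earthdata_login is an illustrative assumption, and it only checks that an entry exists, not that the credentials are accepted by Earthdata.

```python
import netrc


def earthdata_login(netrc_path, host="urs.earthdata.nasa.gov"):
    """Return the login stored for host in a netrc file, or None if absent."""
    # authenticators() returns a (login, account, password) tuple or None.
    entry = netrc.netrc(netrc_path).authenticators(host)
    return entry[0] if entry else None
```

For example, earthdata_login(os.path.expanduser("~/.netrc")) (after import os) should return your Earthdata id.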

AWS acquisition

GOES16 and GOES17 data, and optionally GRIB files, are acquired using the AWS Command Line Interface, so it needs to be installed. To check whether it is already installed, type

aws help

If the command is not found, follow the AWS CLI installation instructions. On Linux, you can do:

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
./aws/install -i /path/to/lib -b /path/to/bin

Get fire static data

When running WRF-SFIRE simulations, one needs to use high-resolution elevation and fuel category data. If you have a GeoTIFF file for elevation and fuel, you can specify the location of these files using etc/vtables/geo_vars.json. So, you can do

cp etc/vtables/geo_vars.json.initial etc/vtables/geo_vars.json

and add the absolute paths to your GeoTIFF files. The routine automatically processes these files and converts them into geogrid files for WPS. If you need to map the categories from the GeoTIFF files to the 13 Rothermel categories, modify the dictionary _var_wisdom in the file src/geo/var_wisdom.py to specify the mapping. By default, the categories from the LANDFIRE dataset are mapped to the 13 Rothermel categories. You can also specify which categories to interpolate using nearest neighbors, that is, the ones that cannot be mapped to the 13 Rothermel categories. Finally, you can specify which categories should be non-burnable by assigning them category 14.

To get GeoTIFF files for CONUS, you can use the LANDFIRE dataset, following the steps in How_to_run_WRF-SFIRE_with_real_data#Obtaining_data_for_geogrid. Alternatively, you can use the GeoTIFF files included in the static dataset, WPS_GEOG/fuel_cat_fire and WPS_GEOG/topo_fire, by specifying in etc/vtables/geo_vars.json

{

"NFUEL_CAT": "/path/to/WPS_GEOG/fuel_cat_fire/lf_data.tif",

"ZSF": "/path/to/WPS_GEOG/topo_fire/ned_data.tif"

}

Running the fuel moisture model requires static terrain data. This is a separate file from the static data downloaded for WRF. To get the static data for the fuel moisture model, go to the wrfxpy directory and do:

wget https://home.chpc.utah.edu/~u6015636/static.tbz
tar xvfj static.tbz

This will untar a static folder containing the static terrain data.

This dataset is needed for the fuel moisture data assimilation system. The fuel moisture model run as a part of WRF-SFIRE doesn't need this dataset and uses data processed by WPS.

Running wrfxpy

Before running anything, do

conda activate wrfx

At this point, you should be able to run wrfxpy on simple examples.

Sample runs

Several preconfigured input files are available in the tests directory. For example,

./forecast.sh tests/input-NAM218.json

will run a sample WRF-SFIRE forecast in the present using the NAM218 weather forecast product and stream the outputs to the visualization server.

You may wish to adapt some parameters as needed.

Download data only

You can use wrfxpy to only download data. The specification of the grid in the input json file will serve as a bounding box for data selection and visualization. For example,

./forecast.sh tests/california_sat_only.json

The downloaded data will be in the ingest directory.

For downloading data only, you do not need to install WRF-SFIRE or WPS, or run a job in the queueing system, or set the corresponding etc/conf.json keys.

Simple forecast

A simple example:

./simple_forecast.sh

Press enter at every step to accept the default values until you are asked for the queuing system, then select the cluster you configured (speedy in the example).

This will generate a job under jobs/experiment.json (or the name of the experiment that we chose).

Then, we can run our first forecast by

./forecast.sh jobs/experiment.json >& logs/experiment.log &

This will generate the experiment in the path specified in the etc/conf.json file, under a workspace subdirectory named after the experiment, submit the job to your batch scheduler, postprocess the results, and send them to your installation of wrfxweb. If you do not have wrfxweb, you can still find the files of the WRF-SFIRE run in the wrf subdirectory of your experiment directory. You can also inspect the generated files, modify them, and resubmit the job.

Fuel moisture model

If tokens.json is set up, the "mesowest" token is provided, and the static data has been downloaded, you can run

./rtma_cycler.sh anything >& logs/rtma_cycler.log &

which will download the necessary weather station data and estimate the fuel moisture over the whole continental US.

wrfxpy: Possible errors

real.exe fails

Depending on the cluster, wrfxpy may fail when it tries to execute ./real.exe. This happens on systems that do not allow an MPI binary to be executed from the command line. wrfxpy does not run real.exe through mpirun, because mpirun may not be allowed on the head node. In that case, you need to provide a serial installation of WRF-SFIRE so that a serial real.exe can be run. To do so, repeat the previous steps using a serial build of WRF-SFIRE:

cd ..
git clone https://github.com/openwfm/WRF-SFIRE WRF-SFIRE-serial
cd WRF-SFIRE-serial
./configure

Options 13 (INTEL ifort/icc serial) and 0 (no nesting)

./compile em_real >& compile_em_real.log &
grep Error compile_em_real.log

Again, if any of the previous steps fails:

./clean -a
./configure

Add -nostdinc to the CPP flag, and repeat the compilation. If this does not fix the build, look for issues in your environment.

Note: This time, we only need to compile em_real because we only need real.exe. However, if you want to test serial vs parallel for any reason, you can proceed to compile em_fire the same way.

Then, add the path of the serial installation to the etc/conf.json file in wrfxpy:

cd ../wrfxpy

and add the following key to etc/conf.json

"wrf_serial_install_path": "/path/to/WRF/serial"

This should solve the problem. If it does not, check the log files from the previous compilations.

WRFx: wrfxweb

wrfxweb is a web-based visualization system for imagery generated by wrfxpy.

wrfxweb requirements

wrfxweb runs in a regular user account on a Linux server equipped with a web server. You need to be able to

- transfer files and execute remote commands on the machine by passwordless ssh, using key authentication without a passphrase

- access the directory wrfxweb/fdds in your account from the web

wrfxweb: server setup

You can set up your own server. We are using Ubuntu Linux with nginx web server, but other software should work too. Configuring the web server to use https is recommended. The resource requirements are modest, 2 cores and 4GB memory are more than sufficient. Simulations can be large, easily several GB each, so provision sufficient disk space.

We can provide a limited amount of resources on our demo server to collaborators. To use our server, first make an ssh key on the machine where you run wrfxpy:

Create the ~/.ssh directory (if you do not have one)

mkdir ~/.ssh
cd ~/.ssh

Create an id_rsa key (if you do not have one) by running

ssh-keygen

and following all the steps (you can accept the defaults by pressing enter each time).

Then send an email to Jan Mandel (jan.mandel@gmail.com) asking for an account on the demo server, and provide:

- Purpose of your request (including information about you)

- User id you would like (user_id)

- Short user id you would like (short_user_id)

- Public key (~/.ssh/id_rsa.pub file previously created)

If your request is approved, you will be able to ssh to the demo server without any password.

wrfxweb: Installation

Clone github repository

Clone the wrfxweb repository on the demo server

ssh user_id@demo.openwfm.org
git clone https://github.com/openwfm/wrfxweb.git

Configuration

Copy the template to create a new etc/conf.json

cp wrfxweb/etc/conf.json.template wrfxweb/etc/conf.json

Configure the following keys in wrfxweb/etc/conf.json:

"url_root": "https://demo.openwfm.org/short_user_id",
"organization": "Organization Name",
"flags": ["Flag 1", "Flag 2", ...]

If no flags are required, specify an empty list or remove the key.

The administrator needs to configure the web server so that the URL

https://demo.openwfm.org/short_user_id

is served from

~user_id/wrfxweb/fdds

Also, create a new simulations folder:

mkdir wrfxweb/fdds/simulations

The next steps are done in the wrfxpy installation that you want to connect to the server (set up in the previous section).

Configure the following keys in etc/conf.json of that wrfxpy installation

"shuttle_ssh_key": "/path/to/id_rsa",
"shuttle_remote_user": "user_id",
"shuttle_remote_host": "demo.openwfm.org",
"shuttle_remote_root": "/path/to/remote/storage/directory",
"shuttle_lock_path": "/tmp/short_user_id"

The "shuttle_remote_root" key is usually set to "/home/user_id/wrfxweb/fdds/simulations". With these keys in place, wrfxpy is ready to send post-processed simulations to the visualization server.

wrfxweb: Testing

Simple forecast

Finally, repeat the previous simple forecast test, but when simple forecast asks

Send variables to visualization server? [default=no]

answer yes.

Then, you should see the post-processed time steps of your simulation appearing in real time on http://demo.openwfm.org under your short_user_id.

Fuel moisture model

The fuel moisture model test can also be run, and a special visualization will appear on http://demo.openwfm.org under your short_user_id.

WRFx: wrfxctrl

A website that enables users to submit jobs to the wrfxpy framework for fire simulation.

wrfxctrl: Installation

Clone github repository

Clone wrfxctrl repository in your cluster

git clone https://github.com/openwfm/wrfxctrl.git

Configuration

Change directory and copy template to create new etc/conf.json

cd wrfxctrl
cp etc/conf-template.json etc/conf.json

Configure the following keys in etc/conf.json

"host" : "127.1.2.3",
"port" : "5050",
"root" : "/short_user_id/",
"wrfxweb_url" : "http://demo.openwfm.org/short_user_id/",
"wrfxpy_path" : "/path/to/wrfxpy",
"jobs_path" : "/path/to/jobs",
"logs_path" : "/path/to/logs",
"sims_path" : "/path/to/sims"

Notes:

- The "host", "port", and "root" entries are only examples. For security reasons, choose your own values and make them hard to guess.

- We recommend removing the "jobs_path", "logs_path", and "sims_path" entries. They default to wrfxctrl/jobs, wrfxctrl/logs, and wrfxctrl/simulations.

wrfxctrl: Testing

Running wrfxctrl

Activate the conda environment and run wrfxctrl.py:

conda activate wrfx
python wrfxctrl.py

This will show a message similar to

Welcome page is http://127.1.2.3:5050/short_user_id/start
 * Serving Flask app "wrfxctrl" (lazy loading)
 * Environment: production
   WARNING: This is a development server. Do not use it in a production deployment.
   Use a production WSGI server instead.
 * Debug mode: off
INFO:werkzeug: * Running on http://127.1.2.3:5050/ (Press CTRL+C to quit)
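The welcome page address in the message above appears to be composed of the "host", "port", and "root" entries of etc/conf.json. The sketch below reproduces that composition so you can work out the address for your own values. It is inferred from the sample message only; the function name welcome_url is illustrative and this is not necessarily how wrfxctrl builds the URL internally.

```python
def welcome_url(conf):
    """Build the start-page URL from the host, port, and root entries."""
    root = conf["root"].strip("/")
    prefix = "/%s/" % root if root else "/"
    return "http://%s:%s%sstart" % (conf["host"], conf["port"], prefix)


example = {"host": "127.1.2.3", "port": "5050", "root": "/short_user_id/"}
print(welcome_url(example))
```

With the example values from the configuration above, this prints the same address as the first line of the message.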

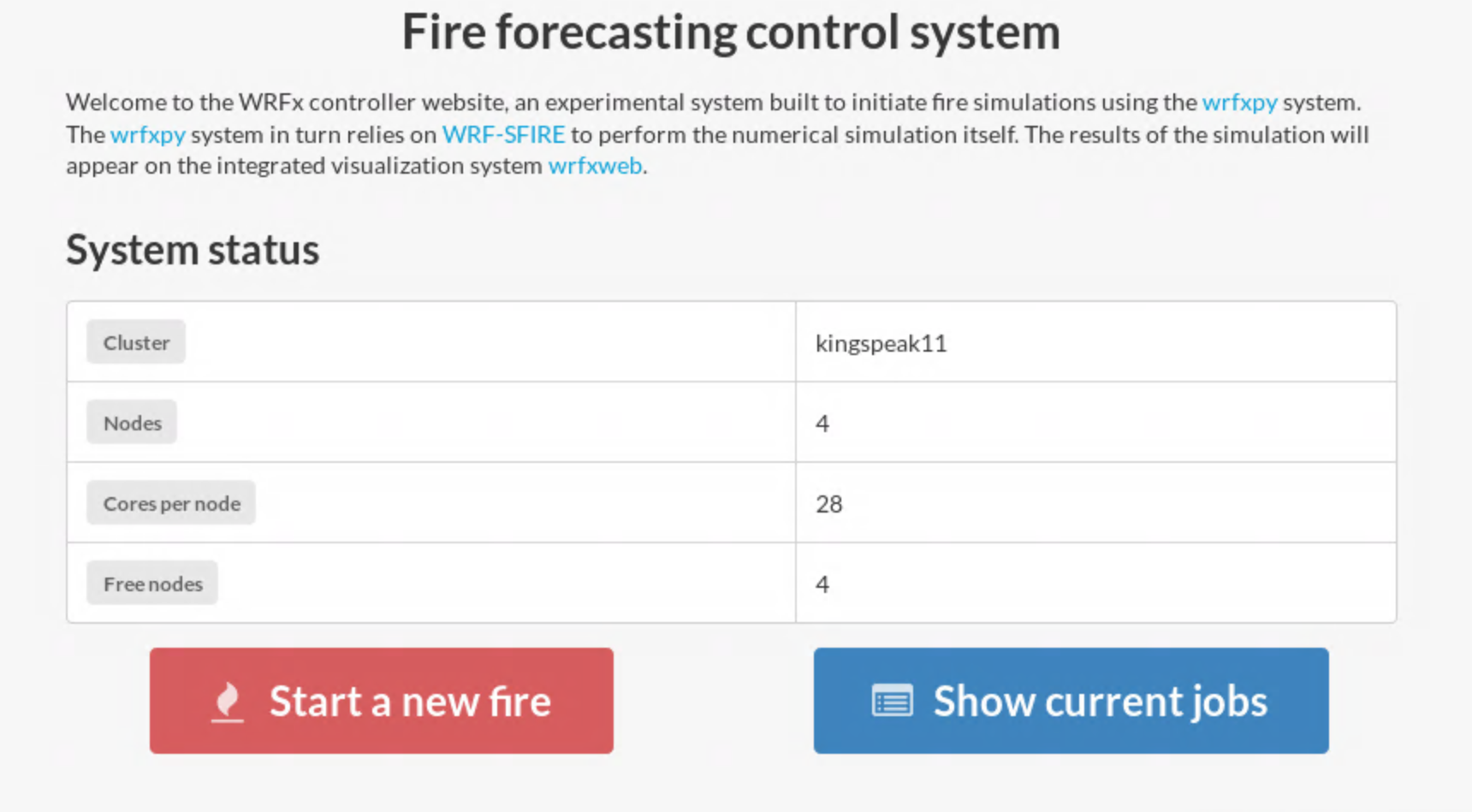

Starting page

Now open your web browser and navigate to http://127.1.2.3:5050/short_user_id/start. This will show a screen similar to the one in the figure.

The starting page shows general information about the cluster. The Start a new fire button starts a new fire simulation, and the Show current jobs button lets you browse the existing jobs.

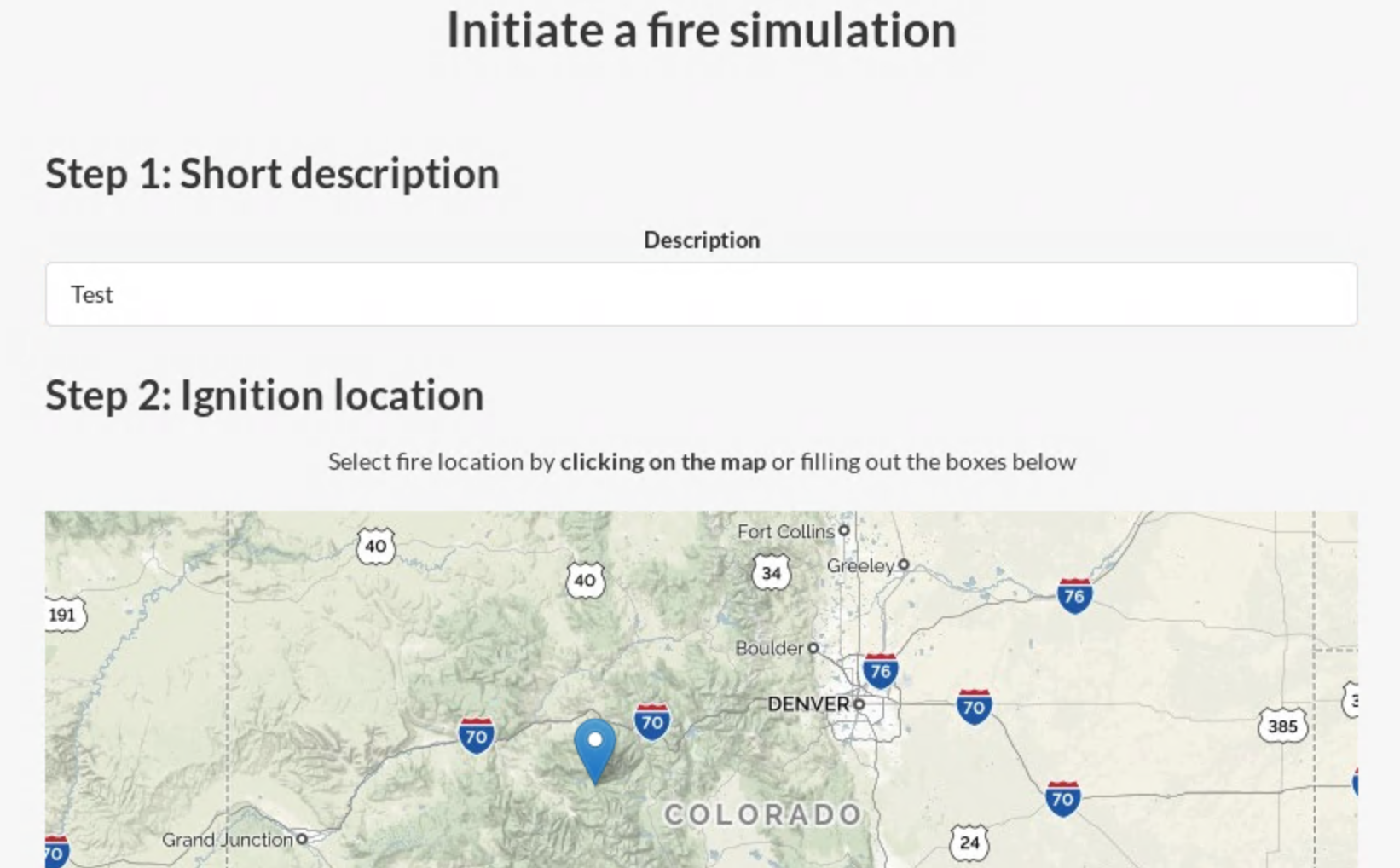

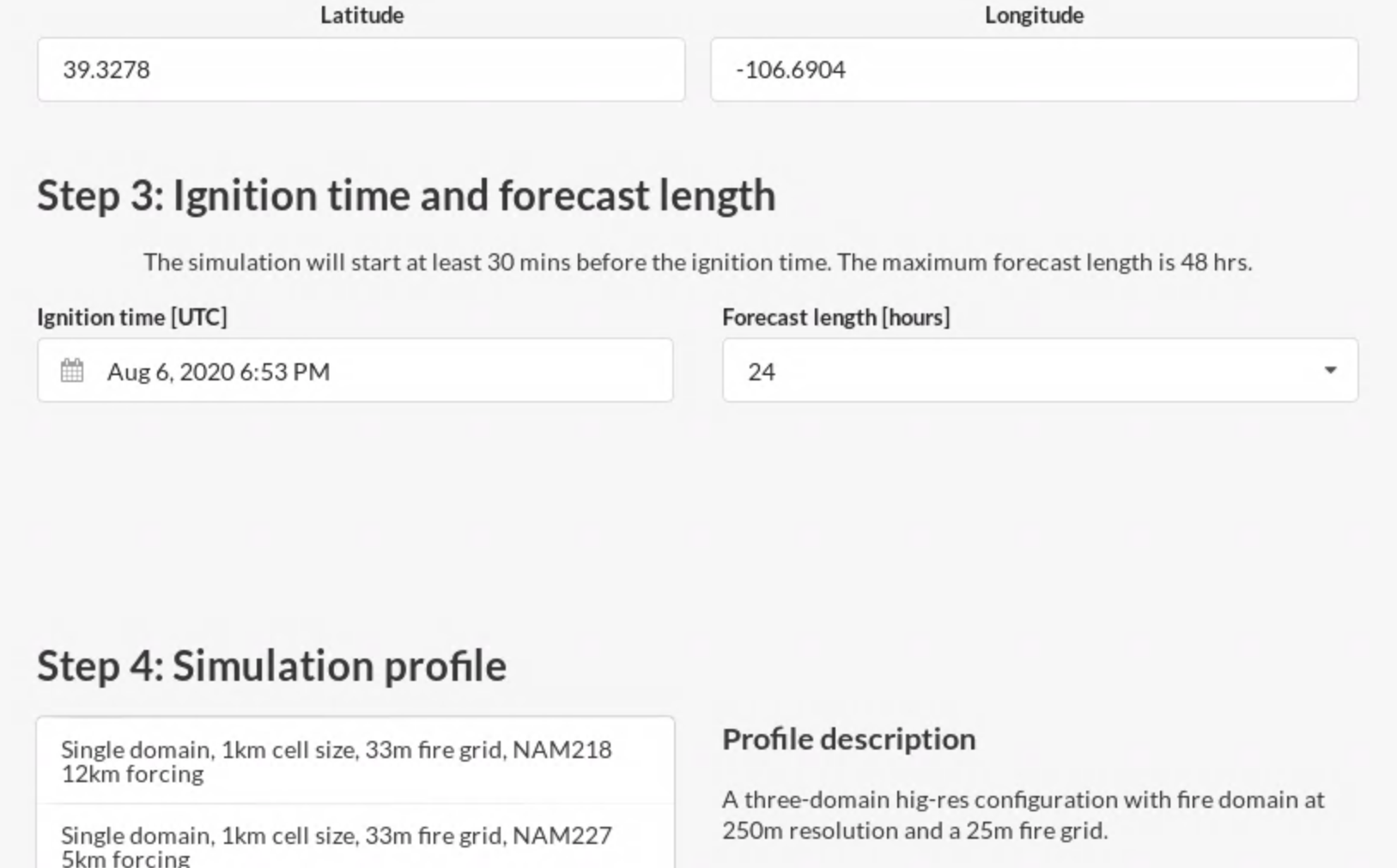

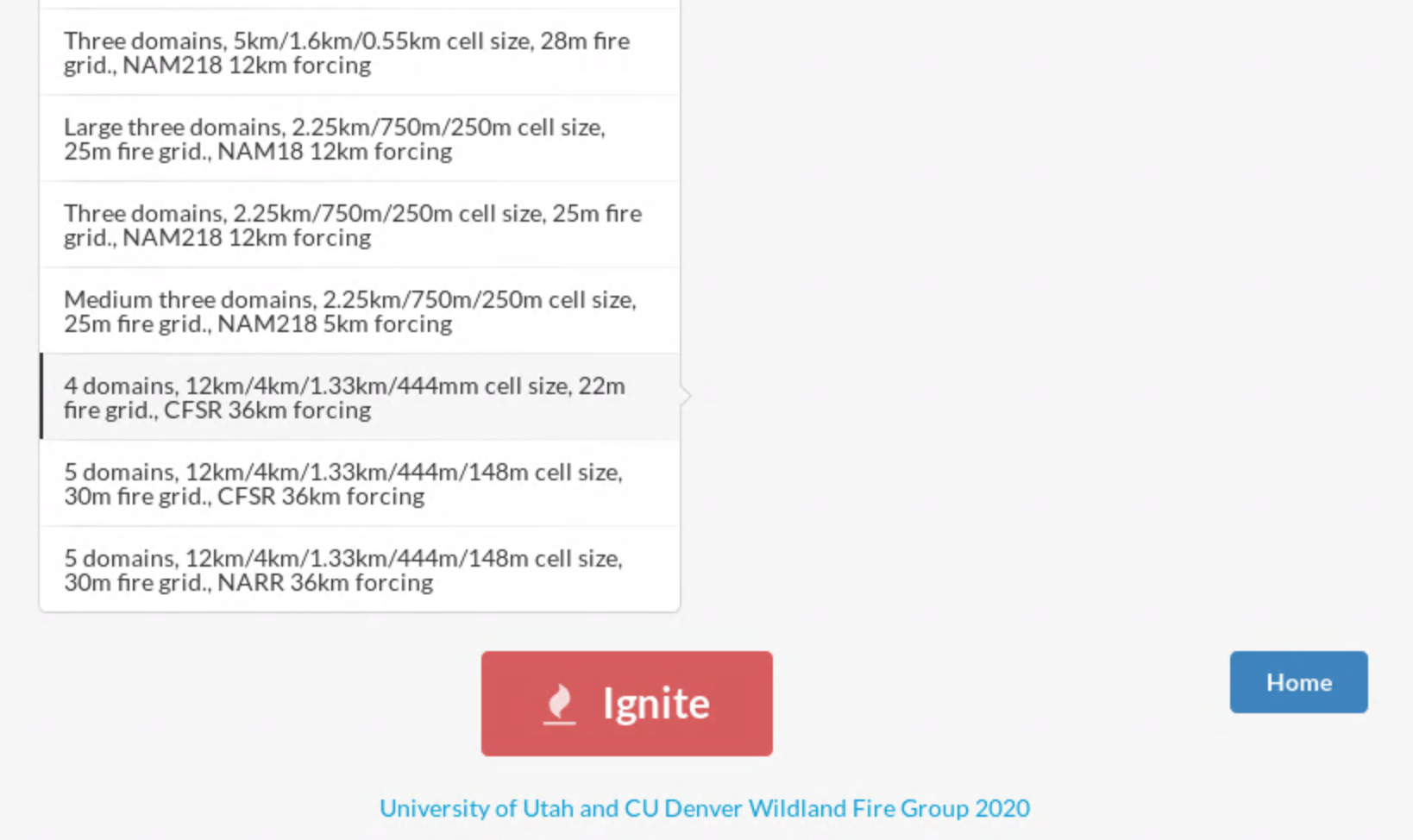

Submission page

From the previous page, select Start a new fire to reach the submission page. On this page, you can specify 1) a short description of the simulation, 2) the ignition location, either by clicking on an interactive map or by entering the latitude and longitude in degrees, 3) the ignition time and the forecast length, and 4) the simulation profile, which defines the number of domains with their resolutions and sizes, and the source of the atmospheric boundary conditions. Once all the simulation options are defined, scroll down to the end of the page and select the Ignite button. This automatically opens the monitoring page, where you can track the progress of the simulation. The images below show an example of a simulation submission.

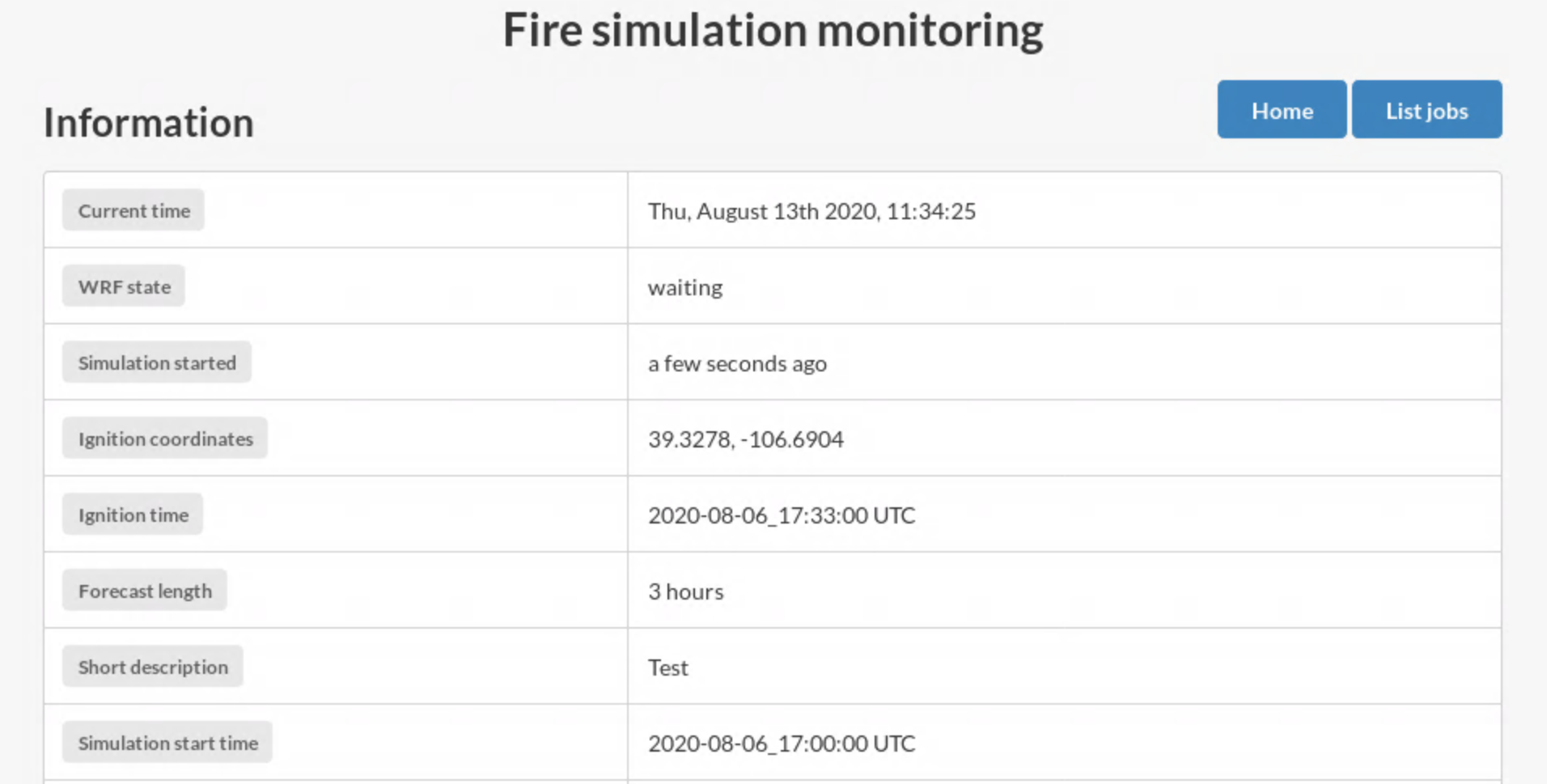

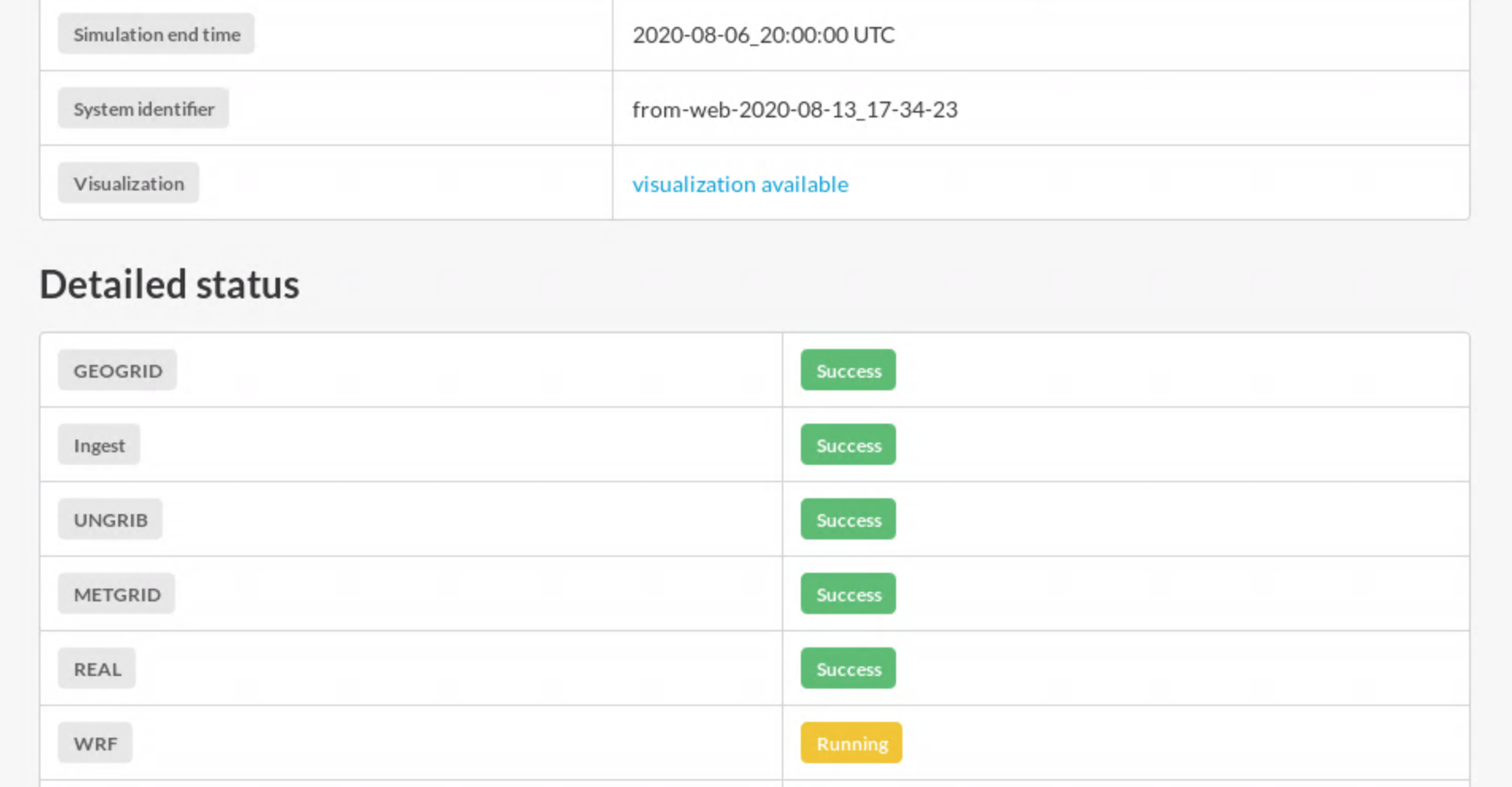

Monitoring page

At the top of the monitoring page, you will see a list of important information about the simulation (see figure below). After the information, there is a list of steps with their current status. The possible statuses are:

- Waiting (grey): The process has not started and needs to wait for another process. All processes are initialized with this status.

- Running (yellow): The process is still in progress. All processes switch their status from Waiting to Running when they start running.

- Success (green): The process finished successfully. All processes switch their status from Running to Success when they finish running successfully.

- Available (green): Part of the process is done and another part is still in progress. This status is only used by the Output process, because the visualization is available once the process starts running.

- Failed (red): The process finished with a failure. All processes switch their status from Running to Failed when they finish running with a failure.
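The statuses listed above can be summarized as a small state machine. The sketch below is only an illustration of the description, not code from wrfxctrl. The names Status, COLORS, TRANSITIONS, and can_transition are hypothetical, and the transitions into and out of Available are assumptions, since the text only says that this status is used by the Output process.

```python
from enum import Enum


class Status(Enum):
    WAITING = "Waiting"
    RUNNING = "Running"
    SUCCESS = "Success"
    AVAILABLE = "Available"
    FAILED = "Failed"


# Display color of each status, as given in the list above.
COLORS = {
    Status.WAITING: "grey",
    Status.RUNNING: "yellow",
    Status.SUCCESS: "green",
    Status.AVAILABLE: "green",
    Status.FAILED: "red",
}

# Allowed status changes. Those involving AVAILABLE are assumed.
TRANSITIONS = {
    Status.WAITING: {Status.RUNNING},
    Status.RUNNING: {Status.SUCCESS, Status.FAILED, Status.AVAILABLE},
    Status.AVAILABLE: {Status.SUCCESS, Status.FAILED},  # assumed
    Status.SUCCESS: set(),
    Status.FAILED: set(),
}


def can_transition(current, new):
    """Tell whether a process may move from status current to status new."""
    return new in TRANSITIONS[current]
```

For instance, can_transition(Status.WAITING, Status.RUNNING) is true, while a process that has reached Success or Failed does not change status again.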

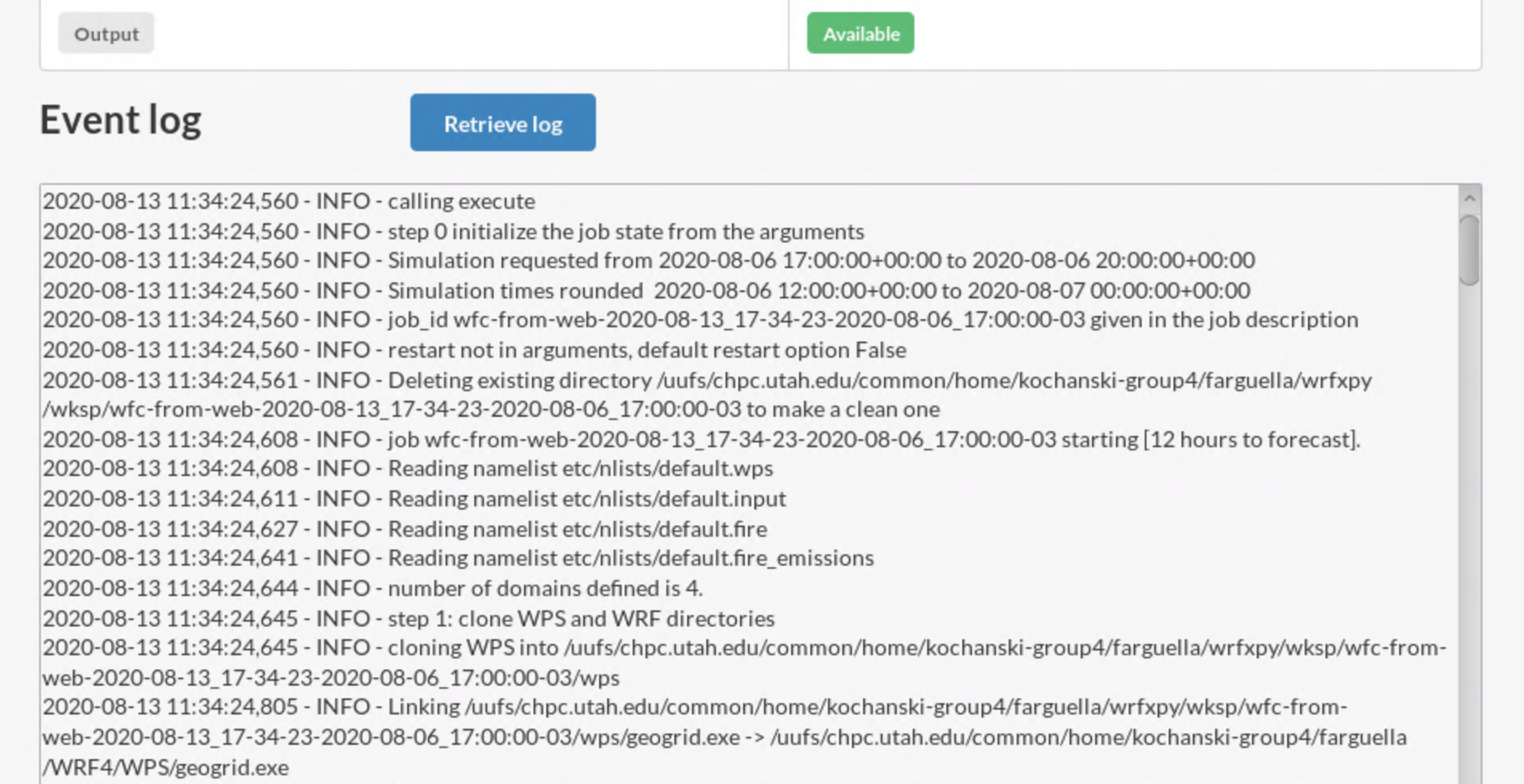

On the monitoring page, the log file can also be retrieved by clicking the Retrieve log button at the end of the page, which opens a scrollable window with the contents of the log file.

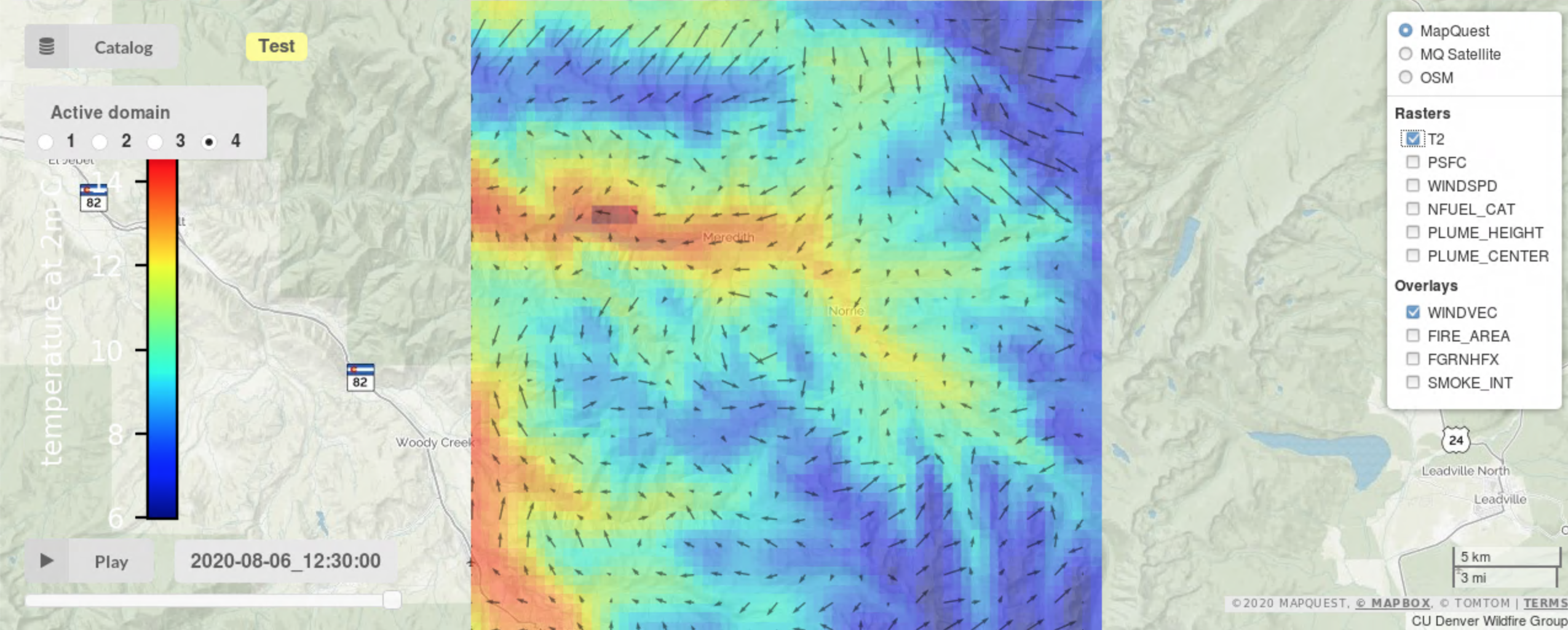

Finally, once the Output process becomes Available, a link to the simulation on the web server generated by wrfxweb appears in the Visualization element of the information section. On that page, you can plot the results interactively in real time while the simulation is still running.

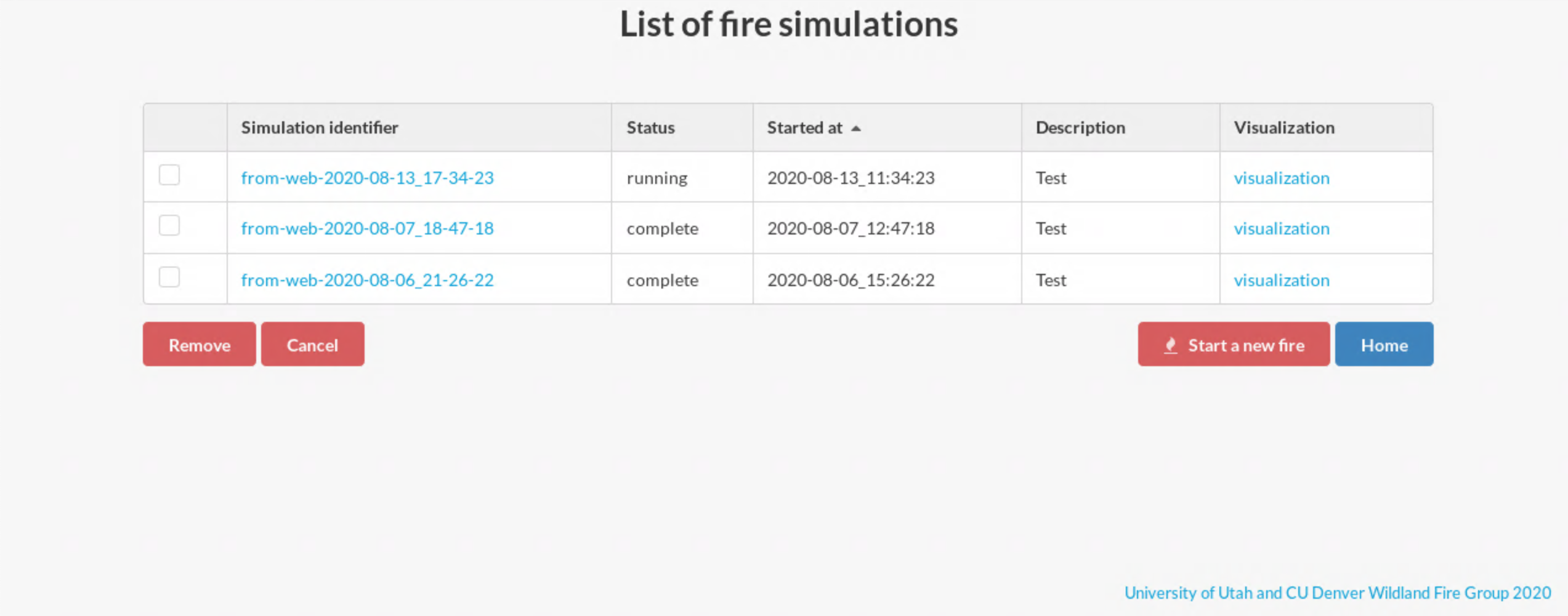

Overview page

From most of the previous pages, you can navigate to the current jobs page, which lists the jobs that are running or have run and lets you cancel or delete any of them.