2019 Cluster Instructions

General Use Information

Logging In

The math-compute system runs (mostly) the Ubuntu operating system. At this time, the main way to use the system is to log in to a terminal session on math-compute with an SSH client. You will need to be on the CU Denver network (wired or CU Denver wireless, not CU Denver Guest), or using the university's VPN client.

You will need a Math/CLAS research computing account to log in. This is separate from your CU Denver account.

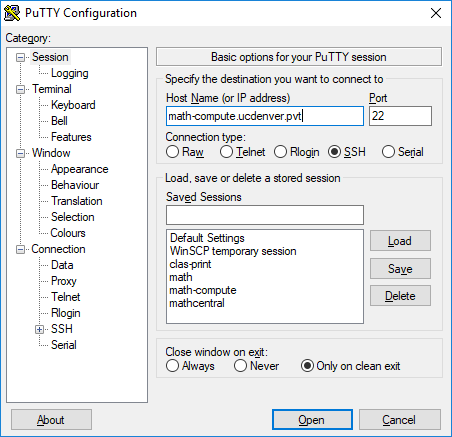

On Windows, you will need an SSH client. There are several out there, but most people use PuTTY, which is available for download here. You can use either the 32-bit or the 64-bit version; computers that require the 32-bit version are rather rare (early Windows 7/Vista/XP). The Host Name is math-compute.ucdenver.pvt.

You can also use the Windows Subsystem for Linux on Windows 10, where you install a Linux distribution as an app and use ssh from it just as you would from a terminal window on any Linux machine. This is often more stable than PuTTY, which tends to get stuck on some computers.

On Macintosh, you can use either a specialized SSH client or Terminal, which is built into the operating system. While in Finder on your Mac, press the Command, Shift, and U keys simultaneously. The Utilities folder should appear, and Terminal is within this folder. Open Terminal and at the prompt type:

ssh awesomeperson@math-compute.ucdenver.pvt

Here awesomeperson is your research computing username. After connecting, it should ask for your research computing password; enter it at this point.

Science will then occur and you should be at the math-compute prompt and in your home directory.

Interactive Use

Using a server ‘interactively’ (aka not scheduling a job) is often needed for troubleshooting a job or just watching what it is doing in real time. After SSH’ing into math-compute, type ssh math-colibri-i01 (or whichever interactive server you want) to go directly to that server. Please do not run anything directly on compute nodes, which are reserved for jobs under the control of the scheduler, even if you are able to ssh there. These are nodes with names like math-colibri-c01, with a "c" before the number. Running things on compute nodes, where other people run jobs through the scheduler, will interfere with their work and make you very unpopular.
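If the OpenSSH client on your own machine supports the -J (ProxyJump) option, the two hops described above can be collapsed into one command. This is only a sketch: the function name hop_to is made up for illustration, awesomeperson stands in for your research computing username, and you still need to be on the campus network or VPN.

```shell
# Hypothetical helper: reach an interactive node through math-compute in one step.
# Usage: hop_to USERNAME NODE
hop_to() {
    hop_user="$1"
    hop_node="$2"
    # -J tells ssh to connect to math-compute first, then continue on to the node
    ssh -J "${hop_user}@math-compute.ucdenver.pvt" "${hop_user}@${hop_node}"
}

# Example (run from your own machine):
#   hop_to awesomeperson math-colibri-i01
```

You will probably be asked for your password twice, once for each hop.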

Using ‘screen’ is generally a good idea on both math-compute and the interactive nodes. Basically, what screen does is start a virtual terminal inside your terminal. Sound confusing? It is. The plus of this virtual terminal is that if you get disconnected, whatever you were running keeps going.

screen -S bananaphone (make the name whatever you want) creates a new terminal with that name.

If you want to disconnect from the screen but leave it running, press Control-A and then the D key. Control-A is the combo that lets screen know you want to do an action.

When you want to reconnect to the screen later, log back onto wherever you started the screen and type screen -r. If you have more than one screen, it’ll complain and tell you the screens you have available to reconnect to. Type screen -r bananaphone to reconnect to that screen. Sometimes there is a number in front of the screen name, so you would type screen -r 3128.bananaphone. It’ll tell you the number in the list that screen -r prints.
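The create-or-reconnect routine can be wrapped in a small shell function so you do not have to remember which one applies. A minimal sketch, assuming the usual screen -ls listing format of number.name; the function name resume_or_start is made up for illustration.

```shell
# Hypothetical helper: reattach to a named screen session, or create it if missing.
# Usage: resume_or_start SESSION_NAME
resume_or_start() {
    session="$1"
    # screen -ls lists sessions as "<number>.<name>"; look for ours
    if screen -ls | grep -q "[0-9]\.${session}[[:space:]]"; then
        # The session exists: reconnect to it
        screen -r "$session"
    else
        # No such session yet: start a new one with that name
        screen -S "$session"
    fi
}

# Example:
#   resume_or_start bananaphone
```

If the session is still attached somewhere else (say, a terminal you left open in the office), screen -r will refuse; screen -d -r bananaphone detaches it there first and then reconnects.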

File Storage

Home directories are on a shared server with 10TB total (right now). If you need to use gobs of data, please use one of the large disk arrays in /nfs/storage/math/. The main one is /nfs/storage/math/gross-s2. It’s getting full so don’t be surprised if it does run out of space. Let Joe know if it does.

For example, the mixtures project is in /nfs/storage/math/gross-s2/projects/mixtures. Sorry. It’s long but we have to stay organized somehow.

If you're a grad student and require more than 1TB of storage, please create a directory in /nfs/storage/math/gross-s2/gradprojects/ and store your data there.

df -h will show you the storage arrays and how much space is available. There are different types of ‘empty’ space in Linux, so df -h may report plenty of space even though the array is full. It’s just a little funky in this type of environment.
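To check a single array instead of reading the whole df listing, you can point df at a path. The sketch below is only an illustration (the function name usage_percent is made up). On Linux in general, one way a filesystem can look empty and still be full is running out of inodes, which df -i reports; whether that is what happens on these arrays is not confirmed.

```shell
# Hypothetical helper: print the "use%" figure for the filesystem holding a path.
# Usage: usage_percent PATH
usage_percent() {
    # -P forces one line per filesystem so awk can pick column 5 reliably
    df -P "$1" | awk 'NR == 2 { print $5 }'
}

# Examples:
#   usage_percent /nfs/storage/math/gross-s2    # space used on the main array
#   df -i /nfs/storage/math/gross-s2            # inode usage on the same array
#   du -sh "$HOME"                              # how much your home directory holds
```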

Passwords

The system uses a CLAS shared logon server for research, so the logins are different from your normal UCDenver logon. If you need your password reset, contact Joe. Usually your username will be the same as your UCDenver one (we do that to simplify things).

Type passwd to change your password on math-compute.

Requesting information about the environment

Queues

There are queues for different departments on math-compute because it points to a central scheduler for all of CLAS. To see these queues, type qstat -g c:

    malingoj@math-compute:~$ qstat -g c
    CLUSTER QUEUE                   CQLOAD   USED    RES  AVAIL  TOTAL aoACDS  cdsuE
    --------------------------------------------------------------------------------
    chem-xenon                        0.12     24      0    168    192      0      0
    clas-pi                           0.00      0      0      8      8      0      0
    math-all                          0.00      0      0    304   1080      0    776
    math-colibri                      0.00      0      0    224    384      0    160
    math-gross                        0.00      0      0    288    288      0      0
    math-highmem                      0.03      0      0     96     96      0      0
    math-interactive                  0.00      0      0     32    104      0     72

CQLOAD is the load of that queue as a fraction (so, as you see above, 0.12 is 12%)

USED is the number of slots currently used

RES is the number of slots reserved (usually 0)

AVAIL is the number of slots available

TOTAL is the total number of slots

aoACDS isn’t used

cdsuE are hosts that are either (d)isabled in the queue, or have (E)rror state/not connected
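If you only care about where there is room, the AVAIL and TOTAL columns described above can be pulled out with awk. A minimal sketch, assuming the column order shown in the qstat -g c output above; the function name free_slots is made up for illustration.

```shell
# Hypothetical helper: summarise `qstat -g c` output read from standard input.
# Prints "<queue> <avail>/<total> free" for every queue that has free slots.
free_slots() {
    # Skip the header and the dashed rule (first two lines).
    # Columns: queue CQLOAD USED RES AVAIL TOTAL aoACDS cdsuE
    awk 'NR > 2 && NF >= 6 && $5 > 0 { printf "%s %d/%d free\n", $1, $5, $6 }'
}

# Example on the cluster:
#   qstat -g c | free_slots
```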

In most cases you’ll just want to use math-all unless you have special jobs. If your job needs extraordinary processing power or memory, please see Joe first so accommodations can be made.

math-colibri and math-score have uber high speed (10-40Gbit) interconnects, so you can use MPI (Message Passing Interface) to run the nodes as one large 'supercomputer' where the nodes share CPU/memory with each other. Because of this, you obviously want to keep such jobs within one physical cluster, hence the math-colibri and math-score queues respectively.

math-highmem is for jobs that need large amounts of memory. The minimum amount of memory to be a highmem node right now is 96GB. If you need something with more than that, you probably want to run the job interactively.

math-interactive is a placeholder queue for watching resource usage. You shouldn’t use this for scheduling jobs - nothing will run.

math-grid is a collection of workstations around the department which can be used for general use jobs.

Hosts

There are lots of hosts (servers) in our system and you can view all of them by typing qhost at the prompt.

    malingoj@math-compute:~$ qhost
    HOSTNAME           ARCH      NCPU NSOC NCOR NTHR   LOAD   MEMTOT   MEMUSE   SWAPTO  SWAPUS
    ----------------------------------------------------------------------------------------------
    global             -            -    -    -    -      -        -        -        -       -
    chem-xenon-c01     lx-amd64    32    2   16   32   0.01   251.4G     2.2G     4.0G     0.0
    chem-xenon-c02     lx-amd64    32    2   16   32   0.03   251.4G     2.1G     4.0G     0.0
    chem-xenon-c03     lx-amd64    32    2   16   32   7.90   251.4G     6.0G     4.0G     0.0
    chem-xenon-c04     lx-amd64    32    2   16   32  14.95   251.4G     8.2G     4.0G     0.0
    chem-xenon-c05     lx-amd64    32    2   16   32   0.03   251.4G     2.1G     4.0G     0.0
    chem-xenon-c06     lx-amd64    32    2   16   32   0.01   251.4G     2.3G     4.0G     0.0
    clas-pi-c01        lx-armhf     4    1    4    4   0.00   976.7M    61.4M   100.0M     0.0
    clas-pi-c02        lx-armhf     4    1    4    4   0.00   976.7M    45.9M   100.0M     0.0
    math-colibri-c01   lx-amd64    32    2   16   32   0.02    62.9G     1.4G   130.0G     0.0
    math-colibri-c02   lx-amd64    32    2   16   32   0.11    62.9G     1.4G   130.0G     0.0
    math-colibri-c03   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c04   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c05   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c06   lx-amd64    32    2   16   32   0.01    62.9G     1.4G   130.0G     0.0
    math-colibri-c07   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c08   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c09   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c10   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c11   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c12   lx-amd64    32    2   16   32   0.15    62.9G     1.4G   130.0G     0.0
    math-colibri-c13   -            -    -    -    -      -        -        -        -       -
    math-colibri-c14   -            -    -    -    -      -        -        -        -       -
    math-colibri-c15   -            -    -    -    -      -        -        -        -       -
    math-colibri-c16   -            -    -    -    -      -        -        -        -       -
    math-colibri-c17   -            -    -    -    -      -        -        -        -       -
    math-colibri-c18   -            -    -    -    -      -        -        -        -       -
    math-colibri-c19   -            -    -    -    -      -        -        -        -       -
    math-colibri-c20   -            -    -    -    -      -        -        -        -       -
    math-colibri-c21   -            -    -    -    -      -        -        -        -       -
    math-colibri-c22   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c23   lx-amd64    32    2   16   32   0.00    62.9G     1.4G   130.0G     0.0
    math-colibri-c24   -            -    -    -    -      -        -        -        -       -
    math-colibri-i01   lx-amd64    64    4   32   64   3.00  1007.9G     8.0G   932.0G     0.0
    math-compgen-c01   lx-amd64     4    1    4    4   0.00     3.7G   495.6M    34.0G     0.0
    math-compgen-c02   lx-amd64     4    1    4    4   0.00     3.7G   497.2M    34.0G     0.0
    math-compgen-c03   lx-amd64     4    1    4    4   0.00     3.7G   489.7M    34.0G     0.0
    math-compgen-c04   lx-amd64     4    1    4    4   0.00     3.7G   496.4M    34.0G     0.0
    math-gross-c01     lx-amd64    24    2   12   24   0.00    23.5G   948.4M    50.0G     0.0
    math-gross-c02     lx-amd64    24    2   12   24   0.00    23.5G   953.5M    50.0G     0.0
    math-gross-c03     lx-amd64    24    2   12   24   0.00    23.5G   954.2M    50.0G     0.0
    math-gross-c04     lx-amd64    24    2   12   24   0.00    23.5G   941.8M    50.0G     0.0
    math-gross-c05     lx-amd64    24    2   12   24   0.00    23.5G   946.8M    50.0G     0.0
    math-gross-c06     lx-amd64    24    2   12   24   0.00    23.5G   942.1M    50.0G     0.0
    math-gross-c07     lx-amd64    24    2   12   24   0.00    23.5G     1.0G    50.0G     0.0
    math-gross-c08     lx-amd64    24    2   12   24   0.00    23.5G   958.7M    50.0G     0.0
    math-gross-c09     lx-amd64    24    2   12   24   0.00    23.5G   844.3M    50.0G     0.0
    math-gross-c10     lx-amd64    24    2   12   24   0.00    23.5G   862.1M    50.0G     0.0
    math-gross-c11     lx-amd64    24    2   12   24   0.01    23.5G   939.4M    50.0G     0.0
    math-gross-c12     lx-amd64    24    2   12   24   0.00    23.5G   844.9M    50.0G     0.0
    math-gross-i01     lx-amd64    24    2   12   24   0.00    94.4G     1.0G   178.3G     0.0
    math-gross-i02     lx-amd64    32    2   16   32   0.00   377.9G     1.1G   130.0G     0.0
    math-turing        -            -    -    -    -      -        -        -        -       ?

As far as columns go:

NCPU is the number of logical CPUs

NSOC is the number of physical CPU sockets

NCOR is the number of physical cores

NTHR is the number of threads

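LOAD is the load average of the server (roughly the number of processes running or waiting for a CPU, so compare it to NCPU rather than reading it as a percentage)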

MEMTOT is the total physical memory

MEMUSE is the total physical memory used

SWAPTO is the total swap memory

SWAPUS is the total swap memory used

There are pros and cons to using more threads than physical cores (aka hyperthreading): in some cases it helps and in some it doesn’t. In general, it helps if you’re running a large multithreaded job on one machine, but slows things down if you’re using MPI or running a bunch of small single core jobs. For our purposes and limited budget, hyperthreading is usually enabled. Colibri is the main exception as it’s the main cluster for MPI jobs. The math-colibri queue will only assign 16 slots per node even though each node/host/server has 32 threads. When colibri is idle, we can enable colibri hosts in math-all and it will use all 32 threads. Easy answer? Just use math-all.

Swap memory is both a blessing and a curse. It’s basically using a slow hard drive (or array of hard drives) for extra memory. While this allows your job to continue to run without blowing up and failing, the CPU has to wait to snag data from that swap, so things usually drop to a crawl if you start ‘swapping’. If something is taking forever and you run qhost and see the host at full memory and into swap, let Joe know so he can try to fix it without killing your jobs.

It looks confusing but there is a method to the madness in the naming convention. Obviously, math-colibri and math-gross are the identifiers for what cluster/building the servers are in, but the -c## and -i## stand for compute and interactive. The c## servers are usually part of the queuing system and the i## ones are for interactive use.
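A quick way to spot swapping hosts is to filter the qhost listing on its last column. This is a sketch under the assumption that SWAPUS is the last column, as in the output above; the function name swapping_hosts is made up for illustration.

```shell
# Hypothetical helper: list hosts from `qhost` output (on stdin) that are using swap.
swapping_hosts() {
    # Skip the header, the dashed rule and the "global" line (first three lines).
    # The last column is SWAPUS: "0.0" means no swap in use,
    # while "-" or "?" means the host reported no data.
    awk 'NR > 3 && $NF != "0.0" && $NF != "-" && $NF != "?" { print $1, $NF }'
}

# Example on the cluster:
#   qhost | swapping_hosts
```

No output means nothing is swapping right now.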
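The naming convention is regular enough to check in a script, for example to make sure you are not about to start something on a compute node. A minimal sketch; the function name node_role is made up, and it assumes every compute or interactive host ends in c or i followed by two digits, as in the listing above.

```shell
# Hypothetical helper: classify a host name by its -c## / -i## suffix.
# Usage: node_role HOSTNAME
node_role() {
    case "$1" in
        # Scheduler-controlled node: do not run things here by hand
        *-c[0-9][0-9]) echo compute ;;
        # Interactive node: fine for hands-on work
        *-i[0-9][0-9]) echo interactive ;;
        # Anything else, such as math-compute itself
        *) echo other ;;
    esac
}

# Example:
#   node_role math-colibri-c01
#   node_role "$(hostname -s)"
```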

Scheduler Instructions

Submitting a job

The qsub command is used to submit a job into a queue. Your job should be a script that is accessible to the compute nodes. There are several switches you can add to the qsub command to set the submission options - the most common ones are:

| Switch | Description |
| --- | --- |
| -q [queuename] | Submit the job into a certain queue (you should almost always do this) |
| -pe [parallel environment] | Submit the job with a specified parallel environment |

By default, a job will run with one "slot" (aka core reservation). If your job is going to use more than one CPU core, use -pe smp XX, where XX is the number of cores your job will consume (16 in the example below).

Example:

qsub -q math-all -pe smp 16 ./awesomescript.sh
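The same options can also live inside the script. The sketch below assumes the scheduler is a Grid Engine variant (the qsub, qstat, qhost and qmod commands suggest so), which reads lines starting with #$ as if they were given on the qsub command line and sets NSLOTS to the number of slots granted. The script contents are made up for illustration.

```shell
#!/bin/bash
# awesomescript.sh: illustrative job script (contents are hypothetical).
# Lines starting with "#$" are read by qsub as command-line options.

# Queue to submit to:
#$ -q math-all
# Parallel environment and number of slots:
#$ -pe smp 16
# Run from the directory the job was submitted from:
#$ -cwd

# The scheduler sets NSLOTS to the slots granted; default to 1 so the
# script also runs by hand on an interactive node.
slots="${NSLOTS:-1}"

# Many threaded programs read OMP_NUM_THREADS; keep it in line with the slots.
export OMP_NUM_THREADS="$slots"

echo "Running on $(hostname) with ${slots} slot(s)"
```

With the options embedded like this, qsub ./awesomescript.sh is enough; anything you do pass on the command line takes precedence over the embedded lines.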

Viewing queues and job status

The qstat and qhost commands are used to gather information from the scheduler. Some of the most common qstat switches are:

| Switch | Description |
| --- | --- |
| -q [queuename] | Request information on a certain queue. If you don't specify this option, all queues will be given |
| -f | Request full output - this is similar to giving a verbose output |
| -u [username] | Request list of jobs for a certain user. Use -u '*' to show all users. |
| -j [jobid] | Request information on a job. This is useful if your job throws an E (error) code. |
| -g c | Show status for all available queues - shows the used and available resources. |

The command qhost will show information on each server managed by the scheduler - mainly number of CPUs/Threads, total memory and total memory used, and total swap memory space and swap memory space used.
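For example, to find which of your jobs have thrown an E code, you can filter the plain qstat listing on its state column and then look at each job with -j. This is a sketch that assumes the default qstat layout, where the state is the fifth column; the function name error_jobs and the job ID are made up.

```shell
# Hypothetical helper: print the IDs of jobs whose state contains "E" (error),
# reading plain `qstat` output from standard input.
error_jobs() {
    # Skip the two header lines; column 5 is the job state (for example r, qw, Eqw)
    awk 'NR > 2 && $5 ~ /E/ { print $1 }'
}

# Example on the cluster:
#   qstat -u "$USER" | error_jobs
#   qstat -j 12345        # then inspect one of them (12345 is a made-up job ID)
```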

Modifying Jobs

The qmod and qalter commands are used to modify job settings or statuses.

The common qmod commands are:

| Switch | Description |
| --- | --- |
| -sj [jobid] | suspend (pause) a job |
| -usj [jobid] | unsuspend (unpause) a job |
| -rj [jobid] | reschedule a job (restart and submit back in queue) |
| -cj [jobid] | clear job error state of a job |

The common qalter commands are:

| Switch | Description |
| --- | --- |
| -q [queuename] | Change the job queue to the specified queue |
| -pe [parallelenvironment] [slots] | Change the parallel environment and/or number of slots used for the job |
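The two commands are often used together, for instance when a waiting job landed in an error state because it asked for the wrong queue. A sketch of that one scenario only; the function name requeue_to and the job ID are made up, and whether this fixes a given error depends on why the job failed.

```shell
# Hypothetical helper: point a waiting job at another queue, then clear its error state.
# Usage: requeue_to QUEUE JOBID
requeue_to() {
    target_queue="$1"
    job_id="$2"
    # Change the queue the job is requesting; stop here if that fails
    qalter -q "$target_queue" "$job_id" || return 1
    # Clear the error state so the scheduler will consider the job again
    qmod -cj "$job_id"
}

# Example (12345 is a made-up job ID):
#   requeue_to math-all 12345
```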