Difference between revisions of "How to run WRF-SFIRE with real data"

| (137 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{ | + | {{historical|Running WRF-SFIRE with real data in the WRFx system}} |

| − | Running WRF- | + | Running WRF-SFIRE with real data is a process very similar to running WRF with real data for weather simulations. |

The [http://www.mmm.ucar.edu/wrf/users WRF users page] has many | The [http://www.mmm.ucar.edu/wrf/users WRF users page] has many | ||

| − | [ | + | [https://www2.mmm.ucar.edu/wrf/users/docs/user_guide_v4/contents.html documents] and |

[http://www2.mmm.ucar.edu/wrf/users/supports/tutorial.html tutorials] outlining this process. The purpose | [http://www2.mmm.ucar.edu/wrf/users/supports/tutorial.html tutorials] outlining this process. The purpose | ||

| − | of this page is to provide a tutorial for using real data with WRF- | + | of this page is to provide a tutorial for using real data with WRF-SFIRE starting from scratch. We begin with a quick outline of the |

| − | steps involved including links to the output of each step. The user can use these linked files to start from any step or to verify | + | steps involved including links to the output of each step. The user can use these linked files to start from any step or to verify their own results. Due to platform and compiler differences your output might differ slightly from those provided. |

| − | their own results. Due to platform and compiler differences your output might differ slightly from those provided. | ||

''This page refers to data sources for the USA only. For other countries, you will need to make appropriate modifications yourself.'' | ''This page refers to data sources for the USA only. For other countries, you will need to make appropriate modifications yourself.'' | ||

| Line 12: | Line 11: | ||

=Outline= | =Outline= | ||

| − | # [[How_to_get_WRF- | + | # [[How_to_get_WRF-SFIRE|Compile WRF-SFIRE source code]] with target em_real. |

| − | |||

# [[#Compiling WPS|Compile WPS]]. | # [[#Compiling WPS|Compile WPS]]. | ||

# [[#Configuring_the_domain|Configure your domain]]. | # [[#Configuring_the_domain|Configure your domain]]. | ||

# [[#Obtaining data for geogrid|Download geogrid datasets]]. | # [[#Obtaining data for geogrid|Download geogrid datasets]]. | ||

| − | # [[#Converting fire data| | + | # [[#Converting fire data|Convert fire data]]. |

# [[#Running geogrid|Run the geogrid executable]]. | # [[#Running geogrid|Run the geogrid executable]]. | ||

# [[#Obtaining atmospheric data|Download atmospheric data]]. | # [[#Obtaining atmospheric data|Download atmospheric data]]. | ||

| Line 26: | Line 24: | ||

=Compiling WPS= | =Compiling WPS= | ||

| − | After you have compiled WRF, | + | After you have compiled WRF-SFIRE, <code>git clone https://github.com/openwfm/WPS</code> at the same directory level as WRF-SFIRE, change to <code>WPS</code> and run |

<code>./configure</code>. This will present you with a list of configuration options similar to those given by WRF. | <code>./configure</code>. This will present you with a list of configuration options similar to those given by WRF. | ||

| − | You will need to chose one with the same compiler that you used to compile WRF. | + | You will need to choose one with the same compiler that you used to compile WRF-SFIRE. Generally, it is unnecessary to compile WPS with parallel support. |

| − | GRIB2 support is only necessary if your atmospheric data source requires it. | + | GRIB2 support is only necessary if your atmospheric data source requires it. Once you have chosen a configuration, you can compile with |

<pre>./compile >& compile.log</pre> | <pre>./compile >& compile.log</pre> | ||

Make sure to check for errors in the log file generated. | Make sure to check for errors in the log file generated. | ||

| Line 35: | Line 33: | ||

=Configuring the domain= | =Configuring the domain= | ||

| − | The physical domain is configured in the geogrid section of namelist.wps in the WPS directory. In this section, you should define | + | The physical domain is configured in the geogrid section of <tt>namelist.wps</tt> in the WPS directory. In this section, you should define |

the geographic projection with <tt>map_proj</tt>, <tt>truelat1</tt>, <tt>truelat2</tt>, and <tt>stand_lon</tt>. Available projections | the geographic projection with <tt>map_proj</tt>, <tt>truelat1</tt>, <tt>truelat2</tt>, and <tt>stand_lon</tt>. Available projections | ||

| − | include <tt>'lambert'</tt>, <tt>'polar'</tt>, <tt>'mercator'</tt>, and <tt>'lat-lon'</tt>. The | + | include <tt>'lambert'</tt>, <tt>'polar'</tt>, <tt>'mercator'</tt>, and <tt>'lat-lon'</tt>. The center of the coarse domain is located at <tt>ref_lon</tt> longitude and <tt>ref_lat</tt> latitude. The computational grid is defined by <tt>e_we/e_sn</tt>, the number of (staggered) grid points in the west-east/south-north direction, and the grid resolution is defined by <tt>dx</tt> and <tt>dy</tt> in meters. |

| − | <tt>ref_lon</tt> longitude and <tt>ref_lat</tt> latitude. The computational grid is defined by <tt>e_we/e_sn</tt>, the number of (staggered) grid | + | We also specify a path to where we will put the static dataset that geogrid will read from, and we specify the highest resolution (.3 arc seconds) that this data is released in. |

| − | points in the west-east/south-north direction, and the grid resolution is defined by <tt>dx</tt> and <tt>dy</tt> in meters. | ||

| − | We also specify a path to where we will put the static dataset that geogrid will read from, and we specify the highest resolution ( | ||

| − | data is released in. | ||

| − | + | <pre>&geogrid | |

| − | e_we = | + | e_we = 97, |

| − | e_sn = | + | e_sn = 97, |

| − | geog_data_res = ' | + | geog_data_res = '.3s', |

| − | dx = | + | dx = 100, |

| − | dy = | + | dy = 100, |

map_proj = 'lambert', | map_proj = 'lambert', | ||

| − | ref_lat | + | ref_lat = 39.728996 |

| − | ref_lon | + | ref_lon = -112.48999 |

| − | truelat1 | + | truelat1 = 39.5 |

| − | truelat2 | + | truelat2 = 39.9 |

| − | stand_lon = - | + | stand_lon = -112.8 |

| − | geog_data_path = '../ | + | geog_data_path = '../WPS_GEOG' |

| − | /</pre | + | /</pre> |

| − | The share section of the WPS namelist defines the fire subgrid refinement in <tt>subgrid_ratio_x</tt> and <tt>subgrid_ratio_y</tt>. This means | + | The share section of the WPS namelist defines the fire subgrid refinement in <tt>subgrid_ratio_x</tt> and <tt>subgrid_ratio_y</tt>. With the values used here, the fire grid is refined 20 times in each direction, giving a resolution of 5 meters by 5 meters. The <tt>start_date</tt> and <tt>end_date</tt> parameters specify the time window that the simulation will be run in. Atmospheric data must be available at both temporal boundaries. The <tt>interval_seconds</tt> parameter tells WPS the number of seconds between each atmospheric dataset. For our example, we will be using the CFSR dataset, which is released every six hours, or 21,600 seconds. |

| − | that the fire grid will be a | ||

| − | the time window that the simulation will be run in. Atmospheric data must be available at both temporal boundaries. The <tt>interval_seconds</tt> | ||

| − | parameter tells WPS the number of seconds between each atmospheric dataset. For our example, we | ||

| − | will be using the | ||

| − | + | <pre>&share | |

wrf_core = 'ARW', | wrf_core = 'ARW', | ||

max_dom = 1, | max_dom = 1, | ||

| − | start_date = ' | + | start_date = '2018-09-08_00:00:00', |

| − | end_date = ' | + | end_date = '2018-09-08_06:00:00', |

| − | interval_seconds = | + | interval_seconds = 21600, |

io_form_geogrid = 2, | io_form_geogrid = 2, | ||

| − | subgrid_ratio_x = | + | subgrid_ratio_x = 20, |

| − | subgrid_ratio_y = | + | subgrid_ratio_y = 20, |

| − | /</pre | + | /</pre> |

| − | The full namelist used can be found [ | + | The full namelist used can be found in [https://pastebin.com/6rV2Qg8Y pastebin] or [https://home.chpc.utah.edu/~u6015636/wiki/namelist.wps namelist.wps]. |
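The arithmetic behind the share section can be checked with a short Python sketch. This is only an illustration: the function names <tt>fire_grid_spacing</tt> and <tt>atmospheric_times</tt> are hypothetical and are not part of WPS or WRF-SFIRE.

```python
from datetime import datetime, timedelta

# Date format used by start_date and end_date in namelist.wps.
WPS_TIME_FORMAT = "%Y-%m-%d_%H:%M:%S"


def fire_grid_spacing(dx, subgrid_ratio):
    """Return the fire grid spacing in meters for an atmospheric spacing dx."""
    return dx / subgrid_ratio


def atmospheric_times(start_date, end_date, interval_seconds):
    """List the time stamps at which atmospheric data must be available."""
    current = datetime.strptime(start_date, WPS_TIME_FORMAT)
    end = datetime.strptime(end_date, WPS_TIME_FORMAT)
    step = timedelta(seconds=interval_seconds)
    times = []
    while current <= end:
        times.append(current.strftime(WPS_TIME_FORMAT))
        current += step
    return times


if __name__ == "__main__":
    # Values taken from the namelist shown above.
    print(fire_grid_spacing(100, 20))
    print(atmospheric_times("2018-09-08_00:00:00", "2018-09-08_06:00:00", 21600))
```

For the namelist above, this gives a 5 meter fire grid and two time stamps, one at each end of the simulation window.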

=Obtaining data for geogrid= | =Obtaining data for geogrid= | ||

| − | First you must download and uncompress the standard [ | + | First, you must download and uncompress the standard [https://www2.mmm.ucar.edu/wrf/src/wps_files/geog_high_res_mandatory.tar.gz geogrid input data] as explained [https://www2.mmm.ucar.edu/wrf/users/download/get_sources_wps_geog.html here]. |

| − | This is a | + | This is a 2.6 GB compressed tarball that uncompresses to around 29 GB. It contains all of the static data that geogrid needs for a standard weather simulation; however, for a WRF-SFIRE simulation we need to fill in two additional fields that are too big to release in a single download for the whole globe. We first need to determine the approximate latitude and longitude bounds for our domain. |

| − | weather simulation; however, for a WRF- | ||

| − | whole globe. We first need to determine the approximate latitude and longitude bounds for our domain. | ||

| − | We know the coordinates in the | + | We know the coordinates in the center from the <tt>ref_lon</tt> and <tt>ref_lat</tt> parameters of the namelist. We can estimate the |

| − | coordinates of the upper right corner by the approximate ratio 9e-6 degrees per meter. So, the upper right | + | coordinates of the lower-left corner and upper-right corner by the approximate ratio 9e-6 degrees per meter. So, the lower-left and upper-right corners of our domain are at approximately |

| − | - | + | <pre>ref_lon ± (97-1)/2*100*9e-6 |

| − | - | + | ref_lat ± (97-1)/2*100*9e-6</pre> |

| + | Therefore, for the purposes of downloading data, we will expand this region to the range -112.55 through -112.4 longitude and 39.65 through 39.8 latitude. | ||
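The corner estimate can be reproduced with a few lines of Python. This is a rough sketch using the same 9e-6 degrees per meter approximation as the text; the function name <tt>domain_bounds</tt> is hypothetical, and the result is only good enough for choosing a download region.

```python
# Rough conversion factor from the text; it ignores the dependence of
# longitude spacing on latitude, so treat the result as an estimate.
DEG_PER_METER = 9e-6


def domain_bounds(ref_lon, ref_lat, e_we, e_sn, dx, dy):
    """Estimate (west, south, east, north) of the domain in degrees."""
    half_width = (e_we - 1) / 2 * dx * DEG_PER_METER
    half_height = (e_sn - 1) / 2 * dy * DEG_PER_METER
    return (
        ref_lon - half_width,
        ref_lat - half_height,
        ref_lon + half_width,
        ref_lat + half_height,
    )


if __name__ == "__main__":
    # Values taken from the geogrid section of namelist.wps.
    west, south, east, north = domain_bounds(-112.48999, 39.728996, 97, 97, 100, 100)
    print(west, south, east, north)
```

The estimated corners fall inside the expanded download range given above, which leaves a margin on every side of the domain.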

==Downloading fuel category data== | ==Downloading fuel category data== | ||

| − | For the United States, Anderson 13 fuel category data is available at the [ | + | For the United States, Anderson 13 fuel category data is available at the [https://landfire.cr.usgs.gov/viewer/viewer.html Landfire] website. Upon opening the national map, click on the <tt>Download Tool</tt> [1] and you will see a menu on the right of the screen. Click on the <tt>LF 2016 Remap (LF_200)</tt>, then <tt>Fuel</tt>, and <tt>us_200 13 Fire Behavior Fuel Models-Anderson</tt> [2]. Finally, click on the <tt>Define Download Area By Coordinates</tt> button [3]. |

| − | click | + | |

| − | [[File: | + | [[File:Landfire_new1.png|700px|center]] |

<br style="clear: both" /> | <br style="clear: both" /> | ||

| − | This will open a window that lets you key in | + | |

| − | [[File: | + | This will open a new window on the right with a form that lets you key in the longitude and latitude range of your selection. In this window, we will input the coordinates computed earlier [4], and below we will click the <tt>Download Area</tt> button [5]. |

| + | |||

| + | [[File:Landfire_new2.png|700px|center]] | ||

| + | <br style="clear: both" /> | ||

| + | |||

| + | In the next window, click on the <tt>Modify</tt> button [6]. This will open a new window listing all of the available data products for the selected region. Make sure only the box next to <tt>US_200 13 Fire Behavior Fuel Models-Anderson</tt> is checked and change the data format from <tt>ArcGRID_with_attribs</tt> to <tt>GeoTIFF_with_attribs</tt>. At the bottom, make sure <tt>Maximum size (MB) per piece:</tt> is set to 250. Then go to the bottom of the page and click <tt>Save Changes & Return to Summary</tt>. | ||

| + | [[File:Landfire_new3.png|700px|center]] | ||

<br style="clear: both" /> | <br style="clear: both" /> | ||

| − | + | ||

| − | + | Finally, click on the <tt>Download</tt> button [7]. The file will be a compressed archive containing, among others, a GeoTIFF file. The name of the file will be different for each request, but in this example we have [https://home.chpc.utah.edu/~u6015636/wiki/lf45409014_US_200FBFM13.zip lf45409014_US_200FBFM13.zip] containing the GeoTIFF file <tt>US_200FBFM13.tif</tt>, which can be found at [[File:US_200FBFM13.tif]] or [https://home.chpc.utah.edu/~u6015636/wiki/US_200FBFM13.tif US_200FBFM13.tif]. |

| − | [[File: | + | |

| + | [[File:Landfire_new4.png|700px|center]] | ||

<br style="clear: both" /> | <br style="clear: both" /> | ||

| − | |||

| − | |||

==Downloading high resolution elevation data== | ==Downloading high resolution elevation data== | ||

| − | + | For the United States, elevation data is also available at the [https://landfire.cr.usgs.gov/viewer/viewer.html Landfire] website. Repeat the steps described above for downloading the fuel data, but select <tt>Topographic</tt> and <tt>us_Elevation</tt> instead. |

| − | + | ||

| − | [[File: | + | [[File:Landfire_new5.png|700px|center]] |

| + | |||

| + | Again, we key in the coordinates determined before and click the <tt>Download Area</tt> button. | ||

<br style="clear: both" /> | <br style="clear: both" /> | ||

| − | + | In the next window, click <tt>Modify</tt> again, make sure only <tt>us_Elevation</tt> is selected, change the format to <tt>Geotiff</tt>, and click <tt>Save Changes & Return to Summary</tt>. |

| − | to | + | |

| − | + | [[File:Landfire_new6.png|700px|center]] | |

| − | [[File: | + | |

<br style="clear: both" /> | <br style="clear: both" /> | ||

| + | In the next window, you should be able to click <tt>Download</tt> in order to download the GeoTIFF file containing topography. You will obtain the zip file [https://home.chpc.utah.edu/~u6015636/wiki/lf34682161_US_DEM2016.zip lf34682161_US_DEM2016.zip] containing a GeoTIFF file that can be downloaded from [[File:US_DEM2016.tif]] or [https://home.chpc.utah.edu/~u6015636/wiki/US_DEM2016.tif US_DEM2016.tif]. | ||

| − | =Converting | + | =Converting fire data= |

| − | This section describes converting data from geotiff to geogrid format | + | This section describes converting data from geotiff to geogrid format. |

| − | In order for geogrid to be able to read this data, we need to convert it into an intermediate format. | + | In order for geogrid to be able to read this data, we need to convert it into an intermediate format. We will be using a utility program included in the [https://github.com/openwfm/wrfxpy wrfxpy] repository. For information on how to obtain and use this tool, see [[How_to_convert_data_for_Geogrid|How to convert data for Geogrid]]. We will go to the wrfxpy installation already obtained and move the geotiff files inside the directory. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | To convert the fuel and elevation data, we will run | |

| − | + | ./convert_geotiff.sh US_200FBFM13.tif geo_data NFUEL_CAT | |

| − | + | ./convert_geotiff.sh US_DEM2016.tif geo_data ZSF | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | The resulting <tt>geo_data/NFUEL_CAT/index</tt> file is created as follows. | |

| − | + | projection = albers_nad83 | |

| − | + | dx = 30.0 | |

| − | + | dy = -30.0 | |

| − | + | truelat1 = 29.5 | |

| − | + | truelat2 = 45.5 | |

| − | + | stdlon = -96.0 | |

| − | + | known_x = 258.0 | |

| − | + | known_y = 313.0 | |

| − | + | known_lon = -112.47513542444187 | |

| + | known_lat = 39.725087912688274 | ||

| + | row_order = top_bottom | ||

| + | description = "Anderson 13 fire behavior categories" | ||

| + | units = "fuel category" | ||

| + | type = categorical | ||

| + | signed = yes | ||

| + | category_min = 0 | ||

| + | category_max = 14 | ||

| + | scale_factor = 1.0 | ||

| + | wordsize = 2 | ||

| + | tile_x = 515 | ||

| + | tile_y = 625 | ||

| + | tile_z = 1 | ||

| + | endian = little | ||

| − | + | The index file above uses a word size of 2 bytes with signed values, which is far more than fuel categories require; even 1 byte can represent 256 categories. Notice that the program has changed the number of categories to 14 and uses the last category to indicate that the source data was out of the range 1-13. For the fuel category data, this means that there is no fuel present, due to a lake, river, road, etc. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | We can check that the projection information entered into the index file is correct by running the <tt>gdalinfo</tt> binary that is installed with GDAL. In this case, <tt>gdalinfo</tt> tells us that the source file contains the following projection parameters. |

| − | |||

| − | < | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | Driver: GTiff/GeoTIFF | |

| − | + | Files: US_200FBFM13.tif | |

| − | + | Size is 515, 625 | |

| − | + | Coordinate System is: | |

| + | PROJCS["USA_Contiguous_Albers_Equal_Area_Conic_USGS_version", | ||

| + | GEOGCS["NAD83", | ||

| + | DATUM["North_American_Datum_1983", | ||

| + | SPHEROID["GRS 1980",6378137,298.2572221010042, | ||

| + | AUTHORITY["EPSG","7019"]], | ||

| + | AUTHORITY["EPSG","6269"]], | ||

| + | PRIMEM["Greenwich",0], | ||

| + | UNIT["degree",0.0174532925199433], | ||

| + | AUTHORITY["EPSG","4269"]], | ||

| + | PROJECTION["Albers_Conic_Equal_Area"], | ||

| + | PARAMETER["standard_parallel_1",29.5], | ||

| + | PARAMETER["standard_parallel_2",45.5], | ||

| + | PARAMETER["latitude_of_center",23], | ||

| + | PARAMETER["longitude_of_center",-96], | ||

| + | PARAMETER["false_easting",0], | ||

| + | PARAMETER["false_northing",0], | ||

| + | UNIT["metre",1, | ||

| + | AUTHORITY["EPSG","9001"]]] | ||

| + | Origin = (-1400235.000000000000000,1986555.000000000000000) | ||

| + | Pixel Size = (30.000000000000000,-30.000000000000000) | ||

| + | Metadata: | ||

| + | AREA_OR_POINT=Area | ||

| + | DataType=Thematic | ||

| + | Image Structure Metadata: | ||

| + | INTERLEAVE=BAND | ||

| + | Corner Coordinates: | ||

| + | Upper Left (-1400235.000, 1986555.000) (112d35' 1.88"W, 39d47'44.01"N) | ||

| + | Lower Left (-1400235.000, 1967805.000) (112d32'44.10"W, 39d37'50.78"N) | ||

| + | Upper Right (-1384785.000, 1986555.000) (112d24'16.21"W, 39d49' 9.72"N) | ||

| + | Lower Right (-1384785.000, 1967805.000) (112d21'59.86"W, 39d39'16.30"N) | ||

| + | Center (-1392510.000, 1977180.000) (112d28'30.49"W, 39d43'30.32"N) | ||

| + | Band 1 Block=128x128 Type=Int16, ColorInterp=Gray | ||

| + | NoData Value=-9999 | ||

| + | Metadata: | ||

| + | RepresentationType=THEMATIC | ||
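One way to cross-check the index file against the <tt>gdalinfo</tt> report is to convert the reported center coordinates to decimal degrees and compare them with <tt>known_lon</tt> and <tt>known_lat</tt>. The Python sketch below does this by hand. The helper names are hypothetical, and the relation between <tt>known_x</tt>, <tt>known_y</tt> and the tile size is an observation from the numbers in this example, not a statement about how the conversion script computes them.

```python
def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees, minutes, seconds to signed decimal degrees."""
    value = degrees + minutes / 60.0 + seconds / 3600.0
    # West longitudes and south latitudes are negative.
    return -value if hemisphere in ("W", "S") else value


def center_index(tile_size):
    """Index of the central pixel, counting pixels from 1."""
    return (tile_size + 1) / 2.0


if __name__ == "__main__":
    # Center reported by gdalinfo: (112d28'30.49"W, 39d43'30.32"N)
    print(dms_to_decimal(112, 28, 30.49, "W"))  # compare with known_lon
    print(dms_to_decimal(39, 43, 30.32, "N"))   # compare with known_lat
    # Raster size reported by gdalinfo: 515 x 625
    print(center_index(515), center_index(625))  # compare with known_x, known_y
```

In this example, the converted center agrees with <tt>known_lon</tt> and <tt>known_lat</tt> to within the rounding of the <tt>gdalinfo</tt> output, and the central pixel indices match <tt>known_x = 258.0</tt> and <tt>known_y = 313.0</tt>.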

| − | + | The resulting <tt>geo_data/ZSF/index</tt> file is created as follows. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | </ | ||

| − | + | projection = albers_nad83 | |

| + | dx = 30.0 | ||

| + | dy = -30.0 | ||

| + | truelat1 = 29.5 | ||

| + | truelat2 = 45.5 | ||

| + | stdlon = -96.0 | ||

| + | known_x = 258.0 | ||

| + | known_y = 313.0 | ||

| + | known_lon = -112.47513542444187 | ||

| + | known_lat = 39.725087912688274 | ||

| + | row_order = top_bottom | ||

| + | description = "National Elevation Dataset 1/3 arcsecond resolution" | ||

| + | units = "meters" | ||

| + | type = continuous | ||

| + | signed = yes | ||

| + | scale_factor = 1.0 | ||

| + | wordsize = 2 | ||

| + | tile_x = 515 | ||

| + | tile_y = 625 | ||

| + | tile_z = 1 | ||

| + | endian = little | ||

| + | |||

| + | Here we have used a word size of 2 bytes and a scale factor of 1.0, which can represent any elevation in the world with 1-meter accuracy, which is approximately the accuracy of the source data. | ||
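The claim about word size can be made concrete with a small Python sketch. It assumes that the stored integers are multiplied by <tt>scale_factor</tt> when they are read, and the function name <tt>representable_range</tt> is hypothetical.

```python
def representable_range(wordsize, signed, scale_factor=1.0):
    """Return the (min, max) values that fit in wordsize bytes after scaling."""
    bits = 8 * wordsize
    if signed:
        lowest, highest = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    else:
        lowest, highest = 0, 2 ** bits - 1
    return lowest * scale_factor, highest * scale_factor


if __name__ == "__main__":
    # Settings from the ZSF index file: wordsize = 2, signed = yes, scale_factor = 1.0
    print(representable_range(2, True, 1.0))
    # A single unsigned byte, for comparison with the fuel category data.
    print(representable_range(1, False))
```

With the settings in the <tt>ZSF</tt> index file, the range is -32768 to 32767 meters in steps of 1 meter, which covers both terrain elevations and the <tt>NoData</tt> value of -9999 reported by <tt>gdalinfo</tt>.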

| + | |||

| + | Again, we compare the projection parameters in the index file with those reported by <tt>gdalinfo</tt> and find that the conversion was correct. | ||

| + | |||

| + | Driver: GTiff/GeoTIFF | ||

| + | Files: US_DEM2016.tif | ||

| + | Size is 515, 625 | ||

| + | Coordinate System is: | ||

| + | PROJCS["USA_Contiguous_Albers_Equal_Area_Conic_USGS_version", | ||

| + | GEOGCS["NAD83", | ||

| + | DATUM["North_American_Datum_1983", | ||

| + | SPHEROID["GRS 1980",6378137,298.2572221010042, | ||

| + | AUTHORITY["EPSG","7019"]], | ||

| + | AUTHORITY["EPSG","6269"]], | ||

| + | PRIMEM["Greenwich",0], | ||

| + | UNIT["degree",0.0174532925199433], | ||

| + | AUTHORITY["EPSG","4269"]], | ||

| + | PROJECTION["Albers_Conic_Equal_Area"], | ||

| + | PARAMETER["standard_parallel_1",29.5], | ||

| + | PARAMETER["standard_parallel_2",45.5], | ||

| + | PARAMETER["latitude_of_center",23], | ||

| + | PARAMETER["longitude_of_center",-96], | ||

| + | PARAMETER["false_easting",0], | ||

| + | PARAMETER["false_northing",0], | ||

| + | UNIT["metre",1, | ||

| + | AUTHORITY["EPSG","9001"]]] | ||

| + | Origin = (-1400235.000000000000000,1986555.000000000000000) | ||

| + | Pixel Size = (30.000000000000000,-30.000000000000000) | ||

| + | Metadata: | ||

| + | AREA_OR_POINT=Area | ||

| + | DataType=Thematic | ||

| + | Image Structure Metadata: | ||

| + | INTERLEAVE=BAND | ||

| + | Corner Coordinates: | ||

| + | Upper Left (-1400235.000, 1986555.000) (112d35' 1.88"W, 39d47'44.01"N) | ||

| + | Lower Left (-1400235.000, 1967805.000) (112d32'44.10"W, 39d37'50.78"N) | ||

| + | Upper Right (-1384785.000, 1986555.000) (112d24'16.21"W, 39d49' 9.72"N) | ||

| + | Lower Right (-1384785.000, 1967805.000) (112d21'59.86"W, 39d39'16.30"N) | ||

| + | Center (-1392510.000, 1977180.000) (112d28'30.49"W, 39d43'30.32"N) | ||

| + | Band 1 Block=128x128 Type=Int16, ColorInterp=Gray | ||

| + | NoData Value=-9999 | ||

| + | Metadata: | ||

| + | RepresentationType=THEMATIC | ||

| + | |||

| + | Finally, the converted data can be found here [https://home.chpc.utah.edu/~u6015636/wiki/geo_data.tar.gz geo_data.tar.gz]. | ||

=Running geogrid= | =Running geogrid= | ||

| − | The geogrid binary will create a | + | The geogrid binary will create a NetCDF file called <tt>geo_em.d01.nc</tt>. This file will contain all of the static data necessary to run your simulation. Before we can run the binary, however, we must tell geogrid what data needs to be in these files, where it can find them, and what kind of preprocessing we want to be done. This information is contained in a run-time configuration file called <tt>GEOGRID.TBL</tt>, which is located in the <tt>geogrid</tt> subdirectory. The file that is released with WPS contains reasonable defaults for the variables defined on the atmospheric grid, but we need to add two additional sections for the two fire grid data sets that we have just created. We will append the <tt>geo_data/GEOGRID.TBL</tt> sections to the file <tt>geogrid/GEOGRID.TBL</tt>. |

| − | of the static data necessary to run your simulation. Before we can run the binary, however, we must tell | + | =============================== |

| − | geogrid what data needs to be in these files, where it can find them, and what kind of preprocessing we | + | name = NFUEL_CAT |

| − | located in the <tt>geogrid</tt> subdirectory. | + | dest_type = categorical |

| − | for the variables defined on the atmospheric grid, but we need to add two additional sections for the two fire | + | interp_option = default:nearest_neighbor+average_16pt+search |

| − | grid data sets that we have just created. | + | abs_path = /absolute/path/to/geo_data/NFUEL_CAT |

| − | <tt>geogrid/GEOGRID.TBL</tt>. | + | priority = 1 |

| − | + | fill_missing = 14 | |

| − | name=NFUEL_CAT | + | subgrid = yes |

| − | + | dominant_only = NFUEL_CAT | |

| − | + | z_dim_name = fuel_cat | |

| − | + | halt_on_missing = no | |

| − | + | =============================== | |

| − | + | name = ZSF | |

| − | + | dest_type = continuous | |

| − | + | interp_option = default:average_gcell(4.0)+four_pt+average_4pt | |

| − | + | abs_path = /absolute/path/to/geo_data/ZSF | |

| − | ============================== | + | priority = 1 |

| − | name=ZSF | + | fill_missing = 0 |

| − | + | smooth_option = smth-desmth_special; smooth_passes=1 | |

| − | + | subgrid = yes | |

| − | + | df_dx = DZDXF | |

| − | + | df_dy = DZDYF | |

| − | + | halt_on_missing = no | |

| − | + | =============================== | |

| − | |||

| − | |||

| − | |||

| − | ============================== | ||

| − | |||

| − | |||

| − | |||

| − | + | For <tt>NFUEL_CAT</tt>, we will use simple nearest-neighbor interpolation, while for <tt>ZSF</tt>, we will use bilinear interpolation with smoothing. Other configurations are possible. See the [https://www2.mmm.ucar.edu/wrf/users/docs/user_guide_v4/v4.2/users_guide_chap3.html#_Description_of_GEOGRID.TBL WPS users guide] for further information. The full table used can be found in [http://pastebin.com/kdymq5ff pastebin] or [https://home.chpc.utah.edu/~u6015636/wiki/GEOGRID.TBL GEOGRID.TBL]. |
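Appending the two new sections to <tt>geogrid/GEOGRID.TBL</tt> can be done in any text editor. As one possible sketch, the Python function below appends one table file to another and skips the step if the sections are already present, so that running it twice does not duplicate them. The function name <tt>append_table</tt> is hypothetical, and the paths in the usage line assume the directory layout described in this page.

```python
from pathlib import Path


def append_table(extra_table, main_table):
    """Append extra_table to main_table unless its text is already there.

    Returns True if the file was modified and False otherwise.
    """
    extra = Path(extra_table).read_text()
    main_path = Path(main_table)
    main = main_path.read_text()
    if extra.strip() in main:
        return False
    # Make sure the appended sections start on a new line.
    separator = "" if main.endswith("\n") else "\n"
    main_path.write_text(main + separator + extra)
    return True


if __name__ == "__main__":
    # Assumed locations, relative to the WPS directory.
    print(append_table("geo_data/GEOGRID.TBL", "geogrid/GEOGRID.TBL"))
```

It is a good idea to keep a backup copy of the original <tt>GEOGRID.TBL</tt> before modifying it.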

| − | |||

| − | |||

| − | [[ | ||

| − | <gallery caption="geo_em.d01.nc" widths=" | + | Once we make these changes to the <tt>GEOGRID.TBL</tt> file, and ensure that all of the directories are in the correct place (including the default geogrid dataset at <tt>../../WPS_GEOG</tt>), we can execute the geogrid binary. |

| − | File: | + | <pre>./geogrid.exe</pre> |

| − | File: | + | This will create a file called <tt>geo_em.d01.nc</tt> in the current directory, which can be found here, [https://home.chpc.utah.edu/~u6015636/wiki/geogrid_output.tar.gz geogrid_output.tar.gz]. The contents of this file can be viewed using your favorite NetCDF viewer. |

| − | File: | + | |

| + | <center> | ||

| + | <gallery caption="geo_em.d01.nc" widths="250px" heights="250px" perrow="3" class="center"> | ||

| + | File:Nfuel_cat_new.png|The fuel category data interpolated to the model grid. | ||

| + | File:Zsf_new.png|The high resolution elevation (1/3") data interpolated to the model grid. | ||

| + | File:Hgt_m_new.png|The low resolution elevation data (30") interpolated to the atmospheric grid. | ||

</gallery> | </gallery> | ||

| + | </center> | ||

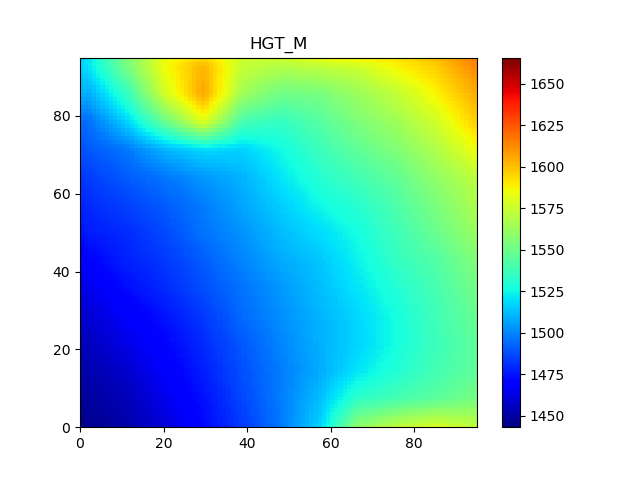

Here, we have visualized the fire grid variables, <tt>NFUEL_CAT</tt> and <tt>ZSF</tt>, as well as the | Here, we have visualized the fire grid variables, <tt>NFUEL_CAT</tt> and <tt>ZSF</tt>, as well as the | ||

variable <tt>HGT_M</tt>, which is the elevation data used by the atmospheric model. We can compare | variable <tt>HGT_M</tt>, which is the elevation data used by the atmospheric model. We can compare | ||

| Line 297: | Line 316: | ||

There are a number of datasets available to initialize a WRF real run. The | There are a number of datasets available to initialize a WRF real run. The | ||

| − | [ | + | [https://www2.mmm.ucar.edu/wrf/users/download/free_data.html WRF users page] lists |

a few. One challenge in running a fire simulation is finding a dataset of | a few. One challenge in running a fire simulation is finding a dataset of | ||

sufficient resolution. One (relatively) high resolution data source is the | sufficient resolution. One (relatively) high resolution data source is the | ||

| − | + | Climate Forecast System (CFS). This is still only 56 km resolution, so | |

no small scale weather patterns will appear in our simulation. In general, we | no small scale weather patterns will appear in our simulation. In general, we | ||

will want to run a series of nested domains in order to catch some small scale weather | will want to run a series of nested domains in order to catch some small scale weather | ||

features; however, we will proceed with a single domain example. | features; however, we will proceed with a single domain example. | ||

| − | The | + | The CFSR datasets are available at the following website, |

| − | [ | + | [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis]. |

| − | We will browse to the [ | + | We will browse to the [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis/6-hourly-by-pressure/2018/201809/20180908/ pressure] and [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis/6-hourly-flux/2018/201809/20180908/ surface] directories |

| − | containing the data for | + | containing the data for September 08, 2018. Our simulation runs from the hours 00-06 on this |

| − | day, so we will download the grib files for hours | + | day, so we will download the pressure grib files for hours |

| − | [ | + | [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis/6-hourly-by-pressure/2018/201809/20180908/cdas1.t00z.pgrbh00.grib2 00] and |

| − | [ | + | [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis/6-hourly-by-pressure/2018/201809/20180908/cdas1.t06z.pgrbh00.grib2 06], and the surface grib files for hours [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis/6-hourly-flux/2018/201809/20180908/cdas1.t00z.sfluxgrbf00.grib2 00] and |

| − | You can get these files also from here, [ | + | [https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis/6-hourly-flux/2018/201809/20180908/cdas1.t06z.sfluxgrbf00.grib2 06]. |

| + | |||

| + | You can get these files also from here, [https://home.chpc.utah.edu/~u6015636/wiki/CFSR_20180908_00-06.tar.gz CFSR_20180908_00-06.tar.gz]. | ||

=Running ungrib= | =Running ungrib= | ||

| − | With the grib files downloaded, we need to link | + | With the grib files downloaded, we need to process them separately for pressure and surface variables. We need to link the pressure GRIB files into the WPS directory using the script <tt>link_grib.csh</tt>. This script takes as arguments all of the grib files that are needed for the simulation. In this case, we can run the following command in the WPS directory. |

| − | <tt>link_grib.csh</tt>. | + | <pre>./link_grib.csh <path to>/CFSR_20180908_00-06/pressure/*.grib2</pre> |

| − | for the simulation. | ||

| − | |||

Substitute <path to> with the directory in which you have saved the grib files. This command | Substitute <path to> with the directory in which you have saved the grib files. This command | ||

creates a series of symbolic links with a predetermined naming sequence to all of the grib files | creates a series of symbolic links with a predetermined naming sequence to all of the grib files | ||

| Line 325: | Line 344: | ||

<tt>GRIBFILE.AAB</tt>. | <tt>GRIBFILE.AAB</tt>. | ||

| − | With the proper links in place, we need to tell ungrib what they contain. | + | With the proper links in place, we need to tell ungrib what they contain. This is done by copying a variable table into the main WPS directory. Several variable tables are distributed with WPS which describe common datasets. You can find these in the directory <tt>WPS/ungrib/Variable_Tables</tt>. |

| − | describe common datasets. You can find these in the directory <tt>WPS/ungrib/Variable_Tables</tt>. | + | In particular, the file which corresponds to the CFSR grib files is called <tt>Vtable.CFSR</tt>, so |

| − | In particular, the file which corresponds to the | ||

we issue the following command to copy it into the current directory. | we issue the following command to copy it into the current directory. | ||

| − | + | <pre>cp ungrib/Variable_Tables/Vtable.CFSR Vtable</pre> | |

We are now ready to run the ungrib executable. | We are now ready to run the ungrib executable. | ||

| − | < | + | <pre>./ungrib.exe</pre> |

| − | This will create two files in the current directory named <tt> | + | This will create two files in the current directory named <tt>COLMET:2018-09-08_00</tt> and <tt>COLMET:2018-09-08_06</tt>. We need to rename them before processing the surface variables, so that the second ungrib run does not overwrite them: |

| + | <pre>mv COLMET:2018-09-08_00 COLMET_P:2018-09-08_00 | ||

| + | mv COLMET:2018-09-08_06 COLMET_P:2018-09-08_06</pre> | ||

| + | and remove the <tt>GRIBFILE.*</tt> files doing | ||

| + | <pre>rm GRIBFILE.*</pre> | ||

| + | |||

| + | Now we can start over for processing surface variables | ||

| + | <pre>./link_grib.csh <path to>/CFSR_20180908_00-06/surface/*.grib2</pre> | ||

| + | Substitute <path to> with the directory in which you have saved the grib files. You should now have two new soft links named <tt>GRIBFILE.AAA</tt> and <tt>GRIBFILE.AAB</tt>. | ||

| + | We are now ready to run the ungrib executable again. | ||

| + | <pre>./ungrib.exe</pre> | ||

| + | This will create two files in the current directory named <tt>COLMET:2018-09-08_00</tt> and <tt>COLMET:2018-09-08_06</tt>. We need to change their name. So | ||

| + | <pre>mv COLMET:2018-09-08_00 COLMET_S:2018-09-08_00 | ||

| + | mv COLMET:2018-09-08_06 COLMET_S:2018-09-08_06</pre> | ||

| + | The four files <tt>COLMET_P:2018-09-08_00</tt>, <tt>COLMET_P:2018-09-08_06</tt>, <tt>COLMET_S:2018-09-08_00</tt>, and <tt>COLMET_S:2018-09-08_06</tt> are the resulting files which can be downloaded here, [https://home.chpc.utah.edu/~u6015636/wiki/ungrib_output.tar.gz ungrib_output.tar.gz]. | ||

=Running metgrid= | =Running metgrid= | ||

Metgrid will take the files created by ungrib and geogrid and combine them into a set of files. At this point, all we need to do is run it. | Metgrid will take the files created by ungrib and geogrid and combine them into a set of files. At this point, all we need to do is run it. | ||

| − | + | <pre>./metgrid.exe</pre> | |

| − | This creates two files named <tt>met_em.d01. | + | This creates two files named <tt>met_em.d01.2018-09-08_00:00:00.nc</tt> and <tt>met_em.d01.2018-09-08_06:00:00.nc</tt>, which you can download here, [https://home.chpc.utah.edu/~u6015636/wiki/metgrid_output.tar.gz metgrid_output.tar.gz]. |

| − | =Running | + | =Running WRF-SFIRE= |

We are now finished with all steps involving WPS. All we need to do is copy over the metgrid output | We are now finished with all steps involving WPS. All we need to do is copy over the metgrid output | ||

| − | files over to our WRF real run directory at <tt> | + | files over to our WRF real run directory at <tt>WRF-SFIRE/test/em_real</tt> and configure our WRF namelist. |

We will need to be sure that the domain description in <tt>namelist.input</tt> matches that of | We will need to be sure that the domain description in <tt>namelist.input</tt> matches that of | ||

the <tt>namelist.wps</tt> we created previously, otherwise WRF will refuse to run. Pay particular attention | the <tt>namelist.wps</tt> we created previously, otherwise WRF will refuse to run. Pay particular attention | ||

to the start/stop times and the grid sizes. The fire ignition parameters are configured | to the start/stop times and the grid sizes. The fire ignition parameters are configured | ||

in the same way as for the ideal case. Relevant portion of the namelist we will use are given below. | in the same way as for the ideal case. Relevant portion of the namelist we will use are given below. | ||

| − | + | <pre>&time_control | |

| − | + | run_days = 0 | |

| − | + | run_hours = 6 | |

| − | + | run_minutes = 0 | |

| − | + | run_seconds = 0 | |

| − | + | start_year = 2018 | |

| − | + | start_month = 9 | |

| − | + | start_day = 8 | |

| − | + | start_hour = 0 | |

| − | + | start_minute = 0 | |

| − | + | start_second = 0 | |

| − | + | end_year = 2018 | |

| − | + | end_month = 9 | |

| − | + | end_day = 8 | |

| − | + | end_hour = 6 | |

| − | + | end_minute = 0 | |

| − | + | end_second = 0 | |

| − | + | interval_seconds = 21600 | |

| − | + | input_from_file = .true. | |

| − | + | history_interval = 30 | |

| − | + | frames_per_outfile = 1000 | |

| − | + | restart = .false. | |

| − | + | restart_interval = 180 | |

| − | + | io_form_history = 2 | |

| − | + | io_form_restart = 2 | |

| − | + | io_form_input = 2 | |

| − | + | io_form_boundary = 2 | |

| − | + | debug_level = 1 | |

| + | / | ||

| − | + | &domains | |

| − | + | time_step = 0 | |

| − | + | time_step_fract_num = 1 | |

| − | + | time_step_fract_den = 2 | |

| − | + | max_dom = 1 | |

| − | + | s_we = 1 | |

| − | + | e_we = 97 | |

| − | + | s_sn = 1 | |

| − | + | e_sn = 97 | |

| − | + | s_vert = 1 | |

| − | + | e_vert = 41 | |

| − | + | num_metgrid_levels = 38 | |

| − | + | num_metgrid_soil_levels = 4 | |

| − | + | dx = 100 | |

| − | + | dy = 100 | |

| − | + | grid_id = 1 | |

| − | + | parent_id = 1 | |

| − | + | i_parent_start = 1 | |

| − | + | j_parent_start = 1 | |

| − | + | parent_grid_ratio = 1 | |

| − | + | parent_time_step_ratio = 1 | |

| − | + | feedback = 1 | |

| − | + | smooth_option = 0 | |

| − | + | sr_x = 20 | |

| − | + | sr_y = 20 | |

| − | + | sfcp_to_sfcp = .true. | |

| − | + | p_top_requested = 10000 | |

| + | / | ||

| − | + | &bdy_control | |

| − | + | spec_bdy_width = 5 | |

| − | + | spec_zone = 1 | |

| − | + | relax_zone = 4 | |

| − | + | specified = .true. | |

| − | + | periodic_x = .false. | |

| − | + | symmetric_xs = .false. | |

| − | + | symmetric_xe = .false. | |

| − | + | open_xs = .false. | |

| − | + | open_xe = .false. | |

| − | + | periodic_y = .false. | |

| − | + | symmetric_ys = .false. | |

| − | + | symmetric_ye = .false. | |

| − | + | open_ys = .false. | |

| − | + | open_ye = .false. | |

| − | + | nested = .false. | |

| − | + | /</pre> | |

| − | </ | + | It is worth mentioning the different <tt>ifire</tt> options implemented: |

| − | The full namelist used can be found [ | + | * <tt>ifire = 1</tt>: WRF-SFIRE code up to date |

| + | * <tt>ifire = 2</tt>: Fire code from 2012 in WRF with changes at NCAR | ||

| + | Visit [https://github.com/openwfm/WRF-SFIRE/blob/master/README-SFIRE.md README-SFIRE.md] for more details. | ||

| + | |||

| + | The full namelist used can be found [https://pastebin.com/V0kGcuS5 pastebin] or [https://home.chpc.utah.edu/~u6015636/wiki/namelist.input namelist.input]. | ||

Once the namelist is properly configured we run the WRF real preprocessor. | Once the namelist is properly configured we run the WRF real preprocessor. | ||

| − | + | <pre>./real.exe</pre> | |

This creates the initial and boundary files for the WRF simulation and fills all missing fields | This creates the initial and boundary files for the WRF simulation and fills all missing fields | ||

from the grib data with reasonable defaults. The files that it produces are <tt>wrfbdy_d01</tt> | from the grib data with reasonable defaults. The files that it produces are <tt>wrfbdy_d01</tt> | ||

| − | and <tt>wrfinput_d01</tt>, which can be downloaded here [ | + | and <tt>wrfinput_d01</tt>, which can be downloaded here, [https://home.chpc.utah.edu/~u6015636/wiki/wrf_real_output.tar.gz wrf_real_output.tar.gz]. |

| + | To prepare for running the fire model, copy its parameters here: | ||

| + | <pre> | ||

| + | cp ../em_fire/hill/namelist.fire . | ||

| + | cp ../em_fire/hill/namelist.fire_emissions . | ||

| + | </pre> | ||

Finally, we run the simulation. | Finally, we run the simulation. | ||

| − | + | <pre>./wrf.exe</pre> | |

| − | The history file for this example can be downloaded here, [ | + | The history file for this example can be downloaded here, [https://home.chpc.utah.edu/~u6015636/wiki/wrf_real_history.tar.gz wrf_real_history.tar.gz]. |

[[Category:WRF-Fire]] | [[Category:WRF-Fire]] | ||

[[Category:Data]] | [[Category:Data]] | ||

| − | [[Category:Howtos|Run WRF- | + | [[Category:Howtos|Run WRF-SFIRE with real data]] |

Latest revision as of 23:57, 2 October 2023

- This is an archived historical WRF-Fire/WRF-SFIRE page. Please go to Running WRF-SFIRE with real data in the WRFx system for the current software version.

Running WRF-SFIRE with real data is a process very similar to running WRF with real data for weather simulations. The WRF users page has many documents and tutorials outlining this process. The purpose of this page is to provide a tutorial for using real data with WRF-SFIRE starting from scratch. We begin with a quick outline of the steps involved including links to the output of each step. The user can use these linked files to start from any step or to verify their own results. Due to platform and compiler differences your output might differ slightly from those provided.

This page refers to data sources for the USA only. For other countries, you will need to make appropriate modifications yourself.

Outline

- Compile WRF-SFIRE source code with target em_real.

- Compile WPS.

- Configure your domain.

- Download geogrid datasets.

- Convert fire data.

- Run the geogrid executable.

- Download atmospheric data.

- Run the ungrib executable.

- Run the metgrid executable.

- Run real.exe and wrf.exe.

Compiling WPS

After you have compiled WRF-SFIRE, git clone https://github.com/openwfm/WPS at the same directory level as WRF-SFIRE, change to WPS and run

./configure. This will present you with a list of configuration options similar to those given by WRF.

You will need to choose one with the same compiler that you used to compile WRF-SFIRE. Generally, it is unnecessary to compile WPS with parallel support.

GRIB2 support is only necessary if your atmospheric data source requires it. Once you have chosen a configuration, you can compile with

./compile >& compile.log

Make sure to check for errors in the log file generated.
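The check of the build can be sketched as a small shell helper. This is only an illustration: the function name check_wps_build is hypothetical, and it assumes that a successful build leaves geogrid.exe, ungrib.exe, and metgrid.exe in the top-level WPS directory next to compile.log.

```shell
# Hypothetical helper: report which WPS executables are missing after a build.
# $1 = WPS directory (default: current directory)
# $2 = name of the build log inside that directory (default: compile.log)
check_wps_build() {
    wps_dir=${1:-.}
    build_log=${2:-compile.log}
    build_status=0
    for exe in geogrid.exe ungrib.exe metgrid.exe; do
        # -e is false for a dangling symbolic link, so a failed build is caught
        if [ ! -e "$wps_dir/$exe" ]; then
            echo "missing: $exe"
            build_status=1
        fi
    done
    # Lines that mention "error" are only shown, since some are harmless
    if [ -f "$wps_dir/$build_log" ] && grep -qi 'error' "$wps_dir/$build_log"; then
        echo "lines mentioning 'error' in $build_log (may be harmless):"
        grep -i 'error' "$wps_dir/$build_log" | head -n 5
    fi
    return "$build_status"
}

# Usage, from the WPS directory after ./compile has finished:
#   check_wps_build . compile.log
```

The helper returns a nonzero status only when an executable is missing, because a plain text search of the log cannot tell real errors from harmless mentions of the word.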

Configuring the domain

The physical domain is configured in the geogrid section of namelist.wps in the WPS directory. In this section, you should define the geographic projection with map_proj, truelat1, truelat2, and stand_lon. Available projections include 'lambert', 'polar', 'mercator', and 'lat-lon'. The center of the coarse domain is located at ref_lon longitude and ref_lat latitude. The computational grid is defined by e_we/e_sn, the number of (staggered) grid points in the west-east/south-north direction, and the grid resolution is defined by dx and dy in meters. We also specify a path to where we will put the static dataset that geogrid will read from, and we specify the highest resolution (.3 arc seconds) that this data is released in.

&geogrid
 e_we = 97,
 e_sn = 97,
 geog_data_res = '.3s',
 dx = 100,
 dy = 100,
 map_proj = 'lambert',
 ref_lat = 39.728996
 ref_lon = -112.48999
 truelat1 = 39.5
 truelat2 = 39.9
 stand_lon = -112.8
 geog_data_path = '../WPS_GEOG'
/

The share section of the WPS namelist defines the fire subgrid refinement in subgrid_ratio_x and subgrid_ratio_y. With a ratio of 20 and an atmospheric grid resolution of 100 meters, the fire grid is refined 20 times in each direction, to a resolution of 5 meters by 5 meters. The start_date and end_date parameters specify the time window in which the simulation will be run. Atmospheric data must be available at both temporal boundaries. The interval_seconds parameter tells WPS the number of seconds between consecutive atmospheric datasets. For our example, we will be using the CFSR dataset, which is released every six hours, or 21,600 seconds.

&share
 wrf_core = 'ARW',
 max_dom = 1,
 start_date = '2018-09-08_00:00:00',
 end_date = '2018-09-08_06:00:00',
 interval_seconds = 21600,
 io_form_geogrid = 2,
 subgrid_ratio_x = 20,
 subgrid_ratio_y = 20,
/

The full namelist used can be found in pastebin or namelist.wps.

Obtaining data for geogrid

First, you must download and uncompress the standard geogrid input data as explained here. This is a 2.6 GB compressed tarball that uncompresses to around 29 GB. It contains all of the static data that geogrid needs for a standard weather simulation; however, for a WRF-SFIRE simulation we need to fill in two additional fields that are too big to release in a single download for the whole globe. We first need to determine the approximate latitude and longitude bounds for our domain.

We know the coordinates of the domain center from the ref_lon and ref_lat parameters of the namelist. We can estimate the coordinates of the lower-left and upper-right corners using the approximate ratio of 9e-6 degrees per meter. So, the lower-left and upper-right corners of our domain are at approximately

ref_lon ± (97-1)/2*100*9e-6
ref_lat ± (97-1)/2*100*9e-6

Therefore for the purposes of downloading data, we will expand this region to the range -112.55 through -112.4 longitude and 39.65 through 39.8 latitude.
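As a worked example, the corner estimate above can be evaluated with awk. This is a minimal sketch: the function name domain_bounds is hypothetical, and it applies the same rough ratio of 9e-6 degrees per meter to both longitude and latitude, exactly as in the formula above.

```shell
# Hypothetical helper: estimate the longitude/latitude bounds of the domain.
# Arguments: ref_lon ref_lat e_we e_sn dx dy  (dx, dy in meters)
domain_bounds() {
    awk -v lon="$1" -v lat="$2" -v e_we="$3" -v e_sn="$4" -v dx="$5" -v dy="$6" 'BEGIN {
        deg_per_m = 9e-6                          # approximate ratio used in the text
        half_x = (e_we - 1) / 2 * dx * deg_per_m  # half width of the domain in degrees
        half_y = (e_sn - 1) / 2 * dy * deg_per_m  # half height of the domain in degrees
        printf "lon %.4f %.4f\n", lon - half_x, lon + half_x
        printf "lat %.4f %.4f\n", lat - half_y, lat + half_y
    }'
}

# Values from the namelist.wps used on this page
domain_bounds -112.48999 39.728996 97 97 100 100
# prints:
#   lon -112.5332 -112.4468
#   lat 39.6858 39.7722
```

Both ranges fall inside the expanded download region of -112.55 through -112.4 longitude and 39.65 through 39.8 latitude.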

Downloading fuel category data

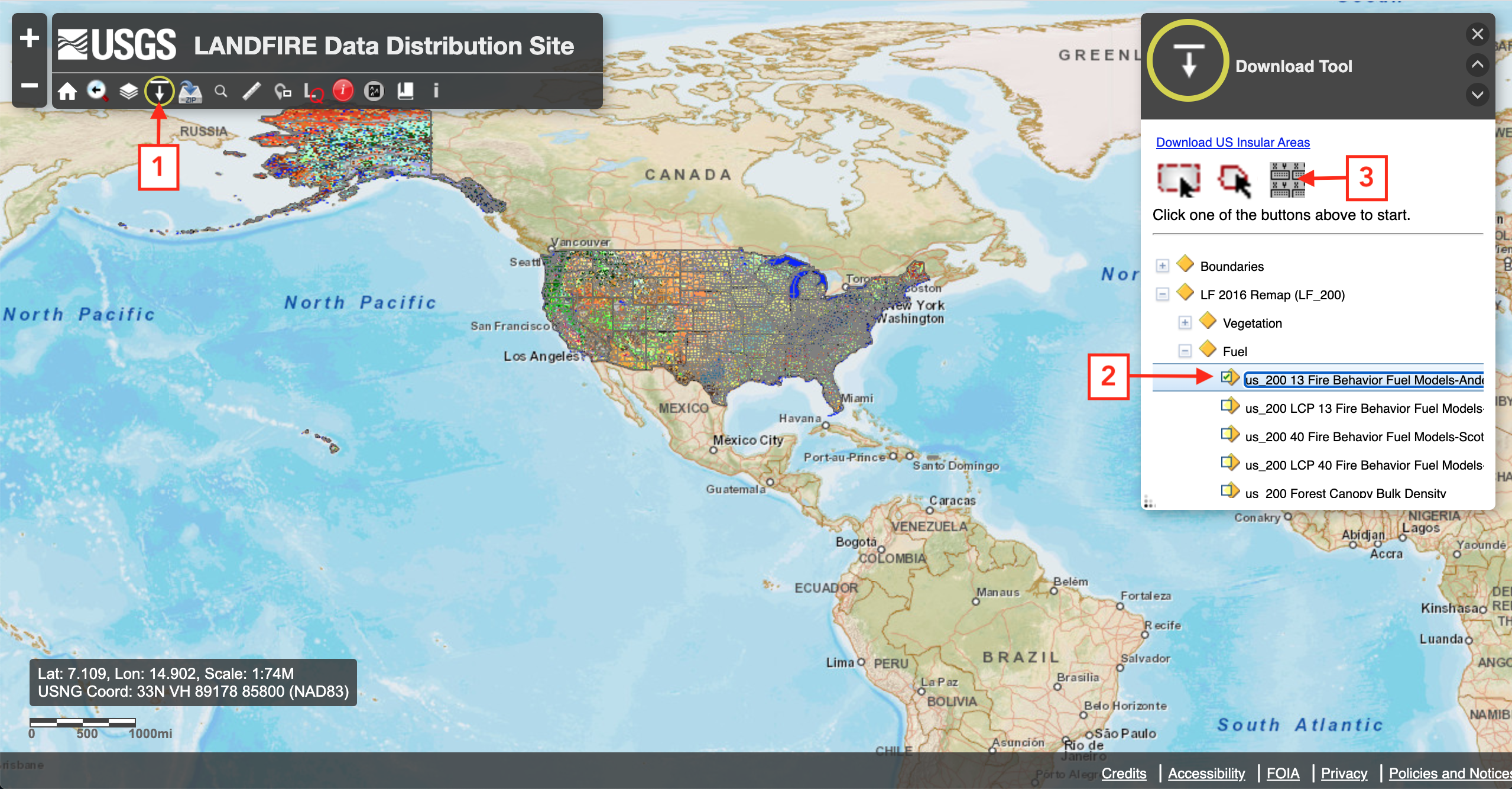

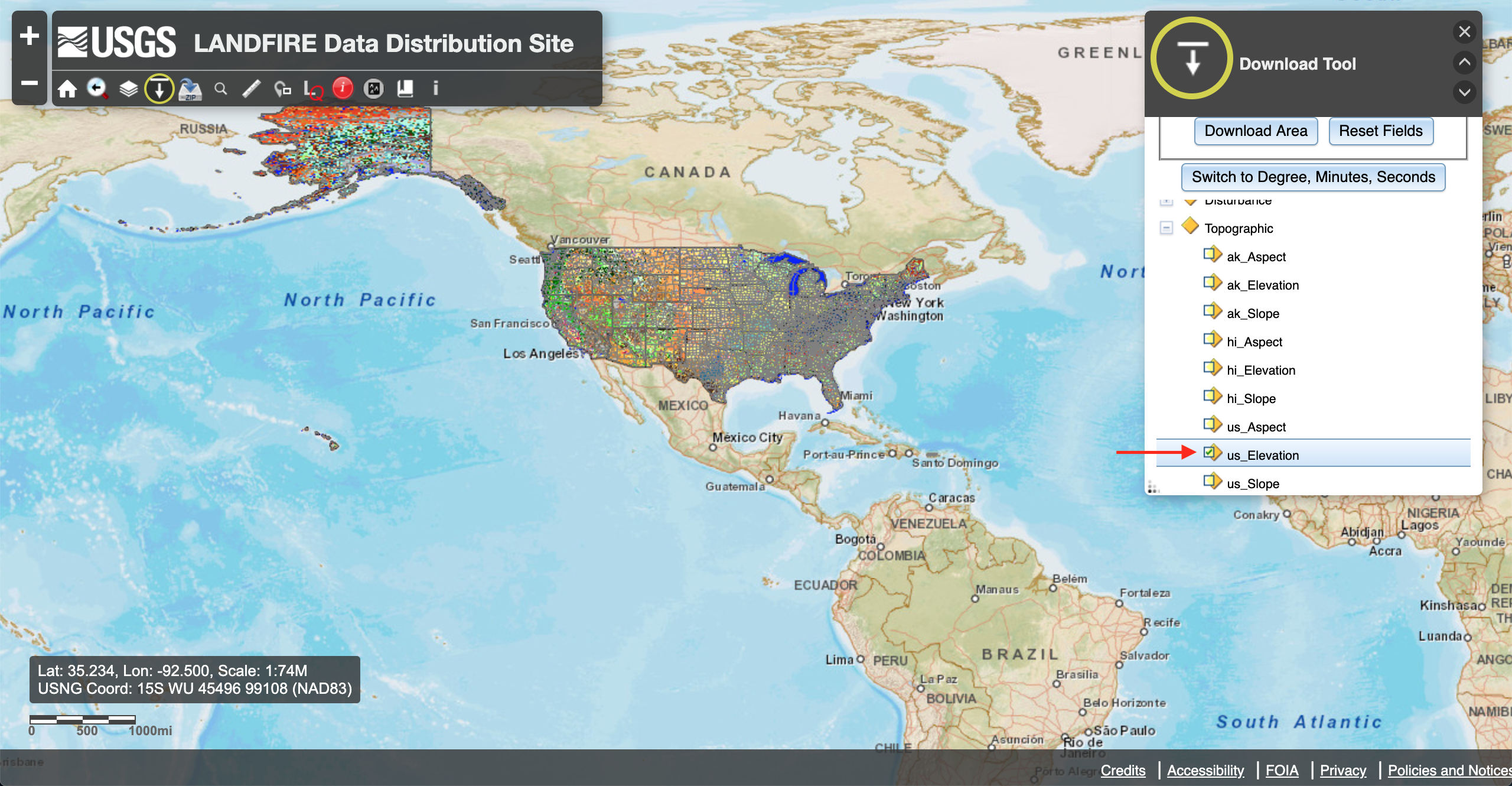

For the United States, Anderson 13 fuel category data is available at the Landfire website. Upon opening the national map, click on the Download Tool [1] and you will see a menu on the right of the screen. Click on the LF 2016 Remap (LF_200), then Fuel, and us_200 13 Fire Behavior Fuel Models-Anderson [2]. Finally, click on the Define Download Area By Coordinates button [3].

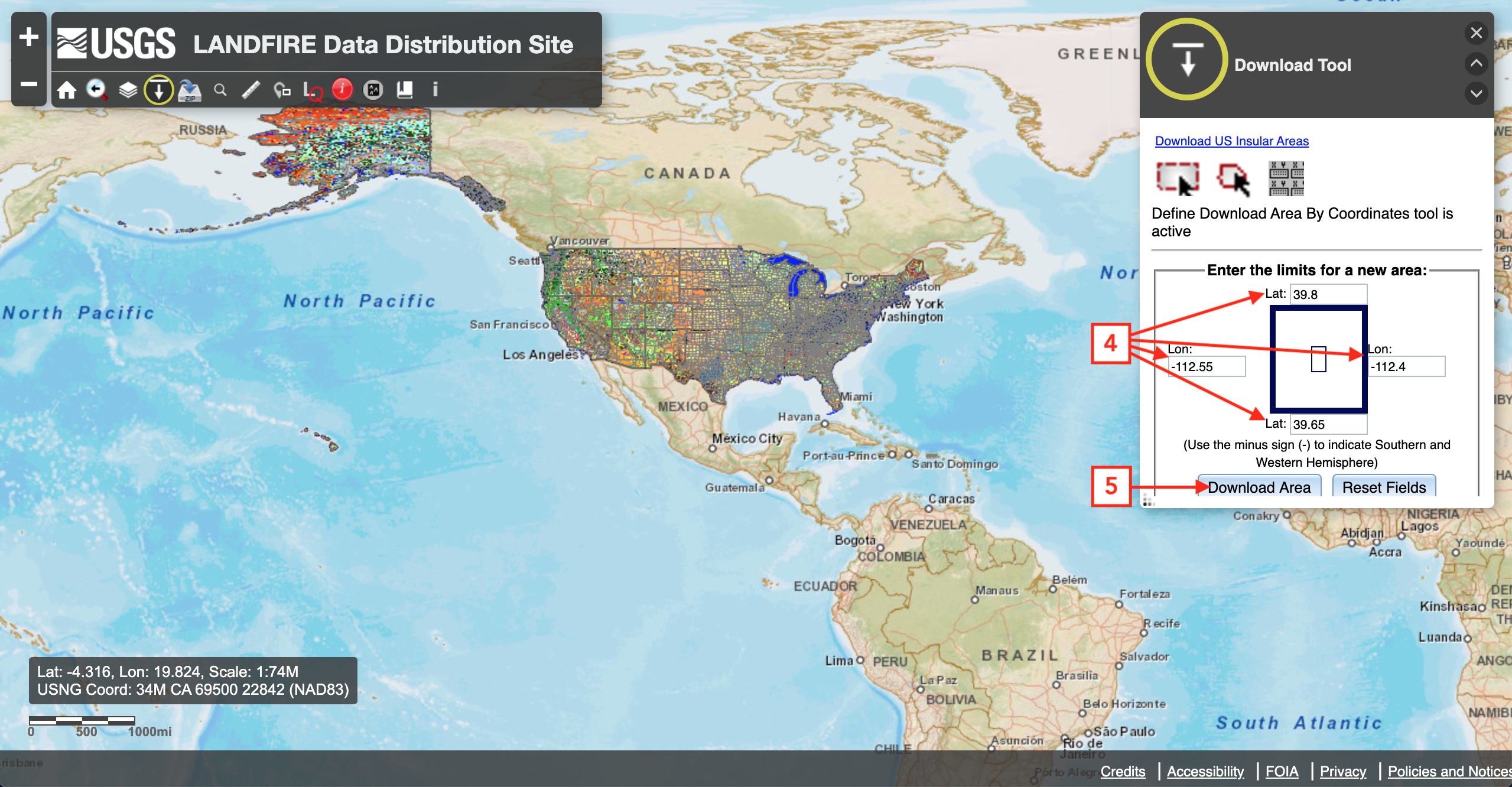

This will open a new window on the right with a form that lets you key in the longitude and latitude range of your selection. In this window, we will input the coordinates computed earlier [4], and below we will click the Download Area button [5].

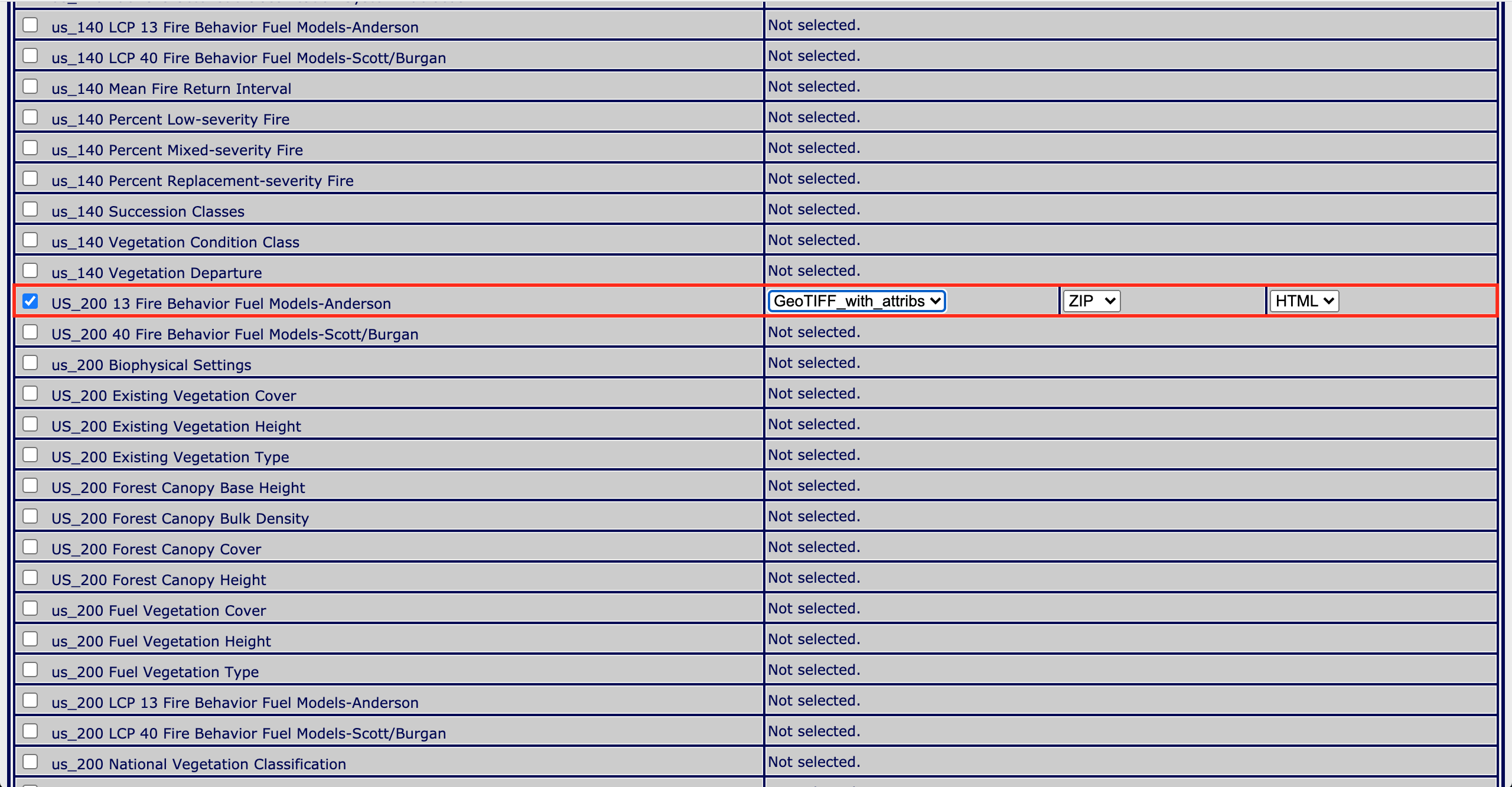

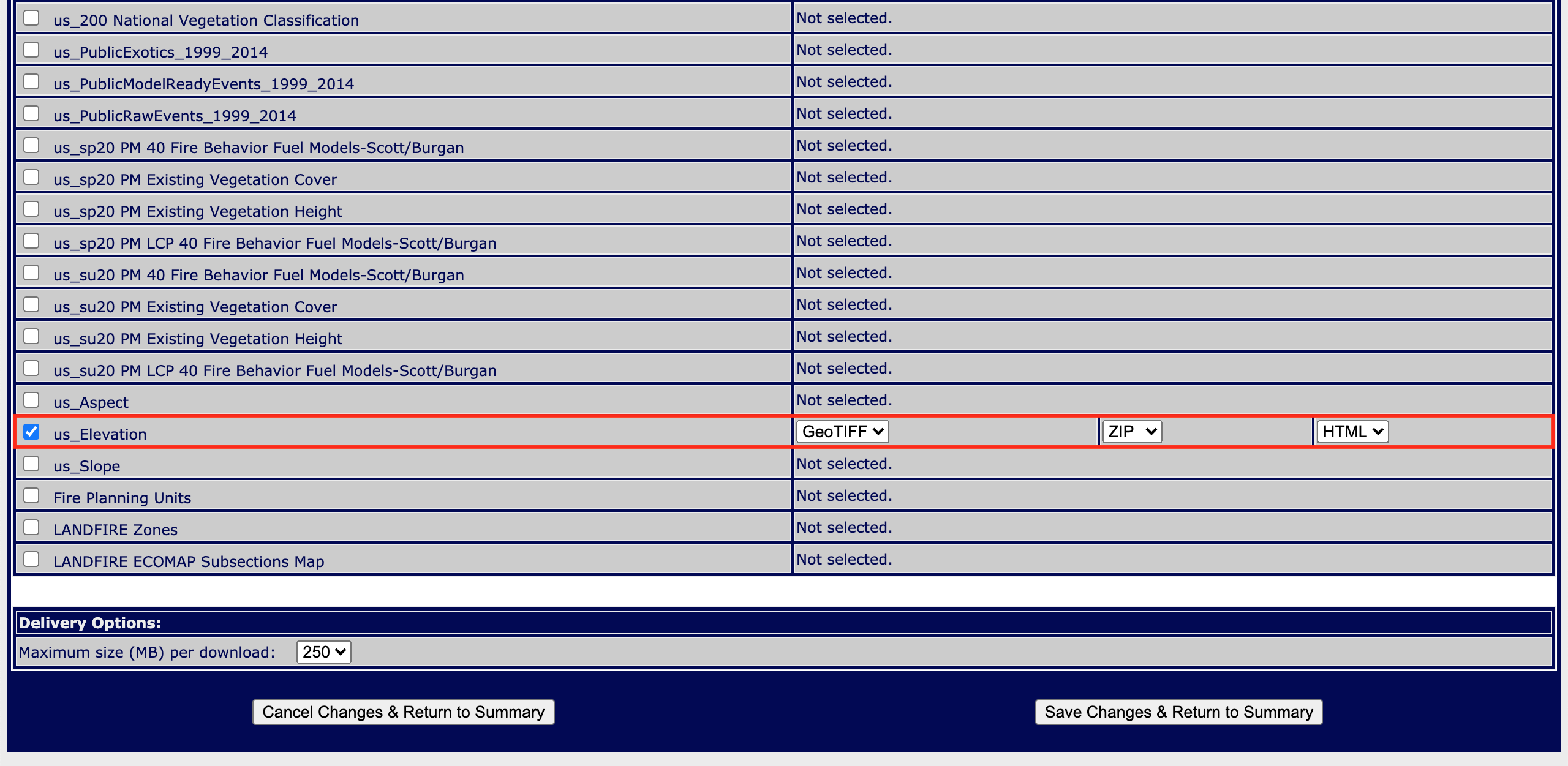

In the next window, click on the Modify button [6]. This will open a new window listing all of the available data products for the selected region. Make sure only the box next to US_200 13 Fire Behavior Fuel Models-Anderson is checked and change the data format from ArcGRID_with_attribs to GeoTIFF_with_attribs. At the bottom make sure Maximum size (MB) per piece: is set to 250. Then go to the bottom of the page and click Save Changes & Return to Summary.

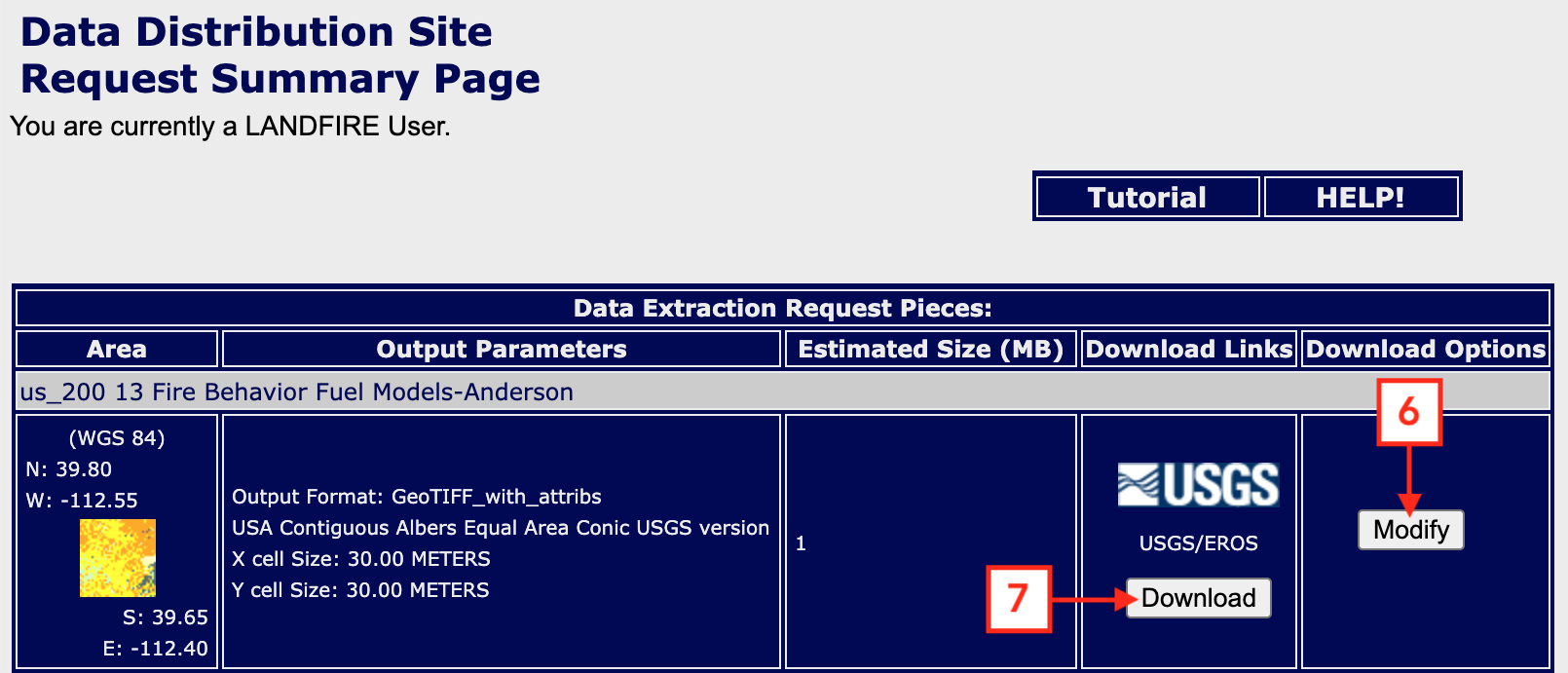

Finally, click on the Download button [7]. The file will be a compressed archive containing, among others, a GeoTIFF file. The name of the file will be different for each request, but in this example we have lf45409014_US_200FBFM13.zip containing the GeoTIFF file US_200FBFM13.tif, which can be found at File:US 200FBFM13.tif or US_200FBFM13.tif.

Downloading high resolution elevation data

For the United States, elevation data is also available at the Landfire website. Repeat the steps described above for downloading the fuel data, but selecting instead Topographic and us_Elevation.

Again, we key in the coordinates determined before and click the Download Area button.

In the next window, click Modify again, make sure only us_Elevation is selected, change the format to GeoTIFF, and click Save Changes & Return to Summary.

In the next window, you should be able to click Download in order to download the GeoTIFF file containing topography. You will obtain the zip file lf34682161_US_DEM2016.zip containing a GeoTIFF file that can be downloaded from File:US DEM2016.tif or US_DEM2016.tif.

Converting fire data

This section describes converting data from geotiff to geogrid format.

In order for geogrid to be able to read this data, we need to convert it into an intermediate format. We will use a utility program included in the wrfxpy repository. For information on how to obtain and use this tool, see How to convert data for Geogrid. We go to the wrfxpy installation obtained earlier and move the GeoTIFF files into that directory.

To convert the fuel and elevation data, we will run

./convert_geotiff.sh US_200FBFM13.tif geo_data NFUEL_CAT
./convert_geotiff.sh US_DEM2016.tif geo_data ZSF

The resulting geo_data/NFUEL_CAT/index file is created as follows.

projection = albers_nad83
dx = 30.0
dy = -30.0
truelat1 = 29.5
truelat2 = 45.5
stdlon = -96.0
known_x = 258.0
known_y = 313.0
known_lon = -112.47513542444187
known_lat = 39.725087912688274
row_order = top_bottom
description = "Anderson 13 fire behavior categories"
units = "fuel category"
type = categorical
signed = yes
category_min = 0
category_max = 14
scale_factor = 1.0
wordsize = 2
tile_x = 515
tile_y = 625
tile_z = 1
endian = little

The index file uses a word size of 2 bytes, which is more than enough to represent the 14 fuel categories. Notice that the program has changed the number of categories to 14 and uses the last category to indicate that the source data was outside the range 1-13. For the fuel category data, this means that no fuel is present, due to a lake, river, road, etc.

We can check that the projection information entered into the index file is correct, by running the gdalinfo binary that is installed with GDAL. In this case, gdalinfo tells us that the source file contains the following projection parameters.

Driver: GTiff/GeoTIFF

Files: US_200FBFM13.tif

Size is 515, 625

Coordinate System is:

PROJCS["USA_Contiguous_Albers_Equal_Area_Conic_USGS_version",

GEOGCS["NAD83",

DATUM["North_American_Datum_1983",

SPHEROID["GRS 1980",6378137,298.2572221010042,

AUTHORITY["EPSG","7019"]],

AUTHORITY["EPSG","6269"]],

PRIMEM["Greenwich",0],

UNIT["degree",0.0174532925199433],

AUTHORITY["EPSG","4269"]],

PROJECTION["Albers_Conic_Equal_Area"],

PARAMETER["standard_parallel_1",29.5],

PARAMETER["standard_parallel_2",45.5],

PARAMETER["latitude_of_center",23],

PARAMETER["longitude_of_center",-96],

PARAMETER["false_easting",0],

PARAMETER["false_northing",0],

UNIT["metre",1,

AUTHORITY["EPSG","9001"]]]

Origin = (-1400235.000000000000000,1986555.000000000000000)

Pixel Size = (30.000000000000000,-30.000000000000000)

Metadata:

AREA_OR_POINT=Area

DataType=Thematic

Image Structure Metadata:

INTERLEAVE=BAND

Corner Coordinates:

Upper Left (-1400235.000, 1986555.000) (112d35' 1.88"W, 39d47'44.01"N)

Lower Left (-1400235.000, 1967805.000) (112d32'44.10"W, 39d37'50.78"N)

Upper Right (-1384785.000, 1986555.000) (112d24'16.21"W, 39d49' 9.72"N)

Lower Right (-1384785.000, 1967805.000) (112d21'59.86"W, 39d39'16.30"N)

Center (-1392510.000, 1977180.000) (112d28'30.49"W, 39d43'30.32"N)

Band 1 Block=128x128 Type=Int16, ColorInterp=Gray

NoData Value=-9999

Metadata:

RepresentationType=THEMATIC

The resulting geo_data/ZSF/index file is created as follows.

projection = albers_nad83
dx = 30.0
dy = -30.0
truelat1 = 29.5
truelat2 = 45.5
stdlon = -96.0
known_x = 258.0
known_y = 313.0
known_lon = -112.47513542444187
known_lat = 39.725087912688274
row_order = top_bottom
description = "National Elevation Dataset 1/3 arcsecond resolution"
units = "meters"
type = continuous
signed = yes
scale_factor = 1.0
wordsize = 2
tile_x = 515
tile_y = 625
tile_z = 1
endian = little

Here we have used word size of 2 bytes and a scale factor of 1.0, which can represent any elevation in the world with 1-meter accuracy, which is approximately the accuracy of the source data.

Again, we compare the projection parameters in the index file with those reported by gdalinfo and find that the conversion was correct.

Driver: GTiff/GeoTIFF

Files: US_DEM2016.tif

Size is 515, 625

Coordinate System is:

PROJCS["USA_Contiguous_Albers_Equal_Area_Conic_USGS_version",

GEOGCS["NAD83",

DATUM["North_American_Datum_1983",

SPHEROID["GRS 1980",6378137,298.2572221010042,

AUTHORITY["EPSG","7019"]],

AUTHORITY["EPSG","6269"]],

PRIMEM["Greenwich",0],

UNIT["degree",0.0174532925199433],

AUTHORITY["EPSG","4269"]],

PROJECTION["Albers_Conic_Equal_Area"],

PARAMETER["standard_parallel_1",29.5],

PARAMETER["standard_parallel_2",45.5],

PARAMETER["latitude_of_center",23],

PARAMETER["longitude_of_center",-96],

PARAMETER["false_easting",0],

PARAMETER["false_northing",0],

UNIT["metre",1,

AUTHORITY["EPSG","9001"]]]

Origin = (-1400235.000000000000000,1986555.000000000000000)

Pixel Size = (30.000000000000000,-30.000000000000000)

Metadata:

AREA_OR_POINT=Area

DataType=Thematic

Image Structure Metadata:

INTERLEAVE=BAND

Corner Coordinates:

Upper Left (-1400235.000, 1986555.000) (112d35' 1.88"W, 39d47'44.01"N)

Lower Left (-1400235.000, 1967805.000) (112d32'44.10"W, 39d37'50.78"N)

Upper Right (-1384785.000, 1986555.000) (112d24'16.21"W, 39d49' 9.72"N)

Lower Right (-1384785.000, 1967805.000) (112d21'59.86"W, 39d39'16.30"N)

Center (-1392510.000, 1977180.000) (112d28'30.49"W, 39d43'30.32"N)

Band 1 Block=128x128 Type=Int16, ColorInterp=Gray

NoData Value=-9999

Metadata:

RepresentationType=THEMATIC
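Part of the comparison between an index file and the gdalinfo report can be automated. The sketch below rests on an assumption taken from this example only: for a single-tile conversion, tile_x and tile_y in the index equal the raster size that gdalinfo prints on its "Size is" line. The function names index_get and check_index_size are hypothetical.

```shell
# Hypothetical helper: print the value of one "key = value" entry of an index file.
# $1 = index file, $2 = key
index_get() {
    awk -F'=' -v key="$2" '
        { k = $1; gsub(/^[ \t]+|[ \t]+$/, "", k) }
        k == key { v = $2; gsub(/^[ \t]+|[ \t]+$/, "", v); print v; exit }
    ' "$1"
}

# Hypothetical helper: compare tile_x/tile_y with the raster size from gdalinfo.
# $1 = index file, $2 = text file holding saved gdalinfo output
check_index_size() {
    gdal_size=$(sed -n 's/^Size is \([0-9][0-9]*\), *\([0-9][0-9]*\).*/\1 \2/p' "$2" | head -n 1)
    index_size="$(index_get "$1" tile_x) $(index_get "$1" tile_y)"
    if [ "$gdal_size" = "$index_size" ]; then
        echo "OK: raster is $gdal_size pixels in both files"
    else
        echo "MISMATCH: gdalinfo reports '$gdal_size', index has '$index_size'"
        return 1
    fi
}

# Usage:
#   gdalinfo US_200FBFM13.tif > fuel.info
#   check_index_size geo_data/NFUEL_CAT/index fuel.info
```

The projection parameters (truelat1, truelat2, stdlon) still need to be compared by eye against the standard_parallel and longitude_of_center entries, since gdalinfo reports them in a different notation.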

Finally, the converted data can be found here, geo_data.tar.gz.

Running geogrid

The geogrid binary will create a NetCDF file called geo_em.d01.nc. This file will contain all of the static data necessary to run your simulation. Before we can run the binary, however, we must tell geogrid what data needs to be in these files, where it can find them, and what kind of preprocessing we want to be done. This information is contained in a run-time configuration file called GEOGRID.TBL, which is located in the geogrid subdirectory. The file that is released with WPS contains reasonable defaults for the variables defined on the atmospheric grid, but we need to add two additional sections for the two fire grid data sets that we have just created. We will append the geo_data/GEOGRID.TBL sections to the file geogrid/GEOGRID.TBL.

===============================

name = NFUEL_CAT

dest_type = categorical

interp_option = default:nearest_neighbor+average_16pt+search

abs_path = /absolute/path/to/geo_data/NFUEL_CAT

priority = 1

fill_missing = 14

subgrid = yes

dominant_only = NFUEL_CAT

z_dim_name = fuel_cat

halt_on_missing = no

===============================

name = ZSF

dest_type = continuous

interp_option = default:average_gcell(4.0)+four_pt+average_4pt

abs_path = /absolute/path/to/geo_data/ZSF

priority = 1

fill_missing = 0

smooth_option = smth-desmth_special; smooth_passes=1

subgrid = yes

df_dx = DZDXF

df_dy = DZDYF

halt_on_missing = no

===============================

For NFUEL_CAT, we will use simple nearest-neighbor interpolation, while for ZSF, we will use bilinear interpolation with smoothing. Other configurations are possible. See the WPS users guide for further information. The full table used can be found at pastebin or GEOGRID.TBL.
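Appending the two fire sections can be done with cat. The following is a minimal sketch, assuming the fragment produced by the conversion is geo_data/GEOGRID.TBL and that it is run from the WPS directory; the function name append_fire_tables is hypothetical. It keeps a backup and refuses to append twice, since duplicate NFUEL_CAT entries would be easy to create by rerunning the step.

```shell
# Hypothetical helper: append the fire sections to the WPS table exactly once.
# $1 = table fragment with the NFUEL_CAT and ZSF sections
# $2 = the GEOGRID.TBL read by geogrid
append_fire_tables() {
    if grep -q '^[[:space:]]*name[[:space:]]*=[[:space:]]*NFUEL_CAT' "$2"; then
        echo "NFUEL_CAT already present in $2; nothing appended"
        return 0
    fi
    # Keep the released table as a backup before modifying it
    cp "$2" "$2.orig" && cat "$1" >> "$2"
}

# Usage, from the WPS directory (adjust the path to geo_data as needed):
#   append_fire_tables <path to>/geo_data/GEOGRID.TBL geogrid/GEOGRID.TBL
```

After appending, check that each abs_path entry points to the actual location of the converted NFUEL_CAT and ZSF directories.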

Once we make these changes to the GEOGRID.TBL file, and ensure that all of the directories are in the correct place (including the default geogrid dataset at the geog_data_path set in namelist.wps, here ../WPS_GEOG), we can execute the geogrid binary.

./geogrid.exe

This will create a file called geo_em.d01.nc in the current directory, which can be found here, geogrid_output.tar.gz. The contents of this file can be viewed using your favorite NetCDF viewer.

- geo_em.d01.nc

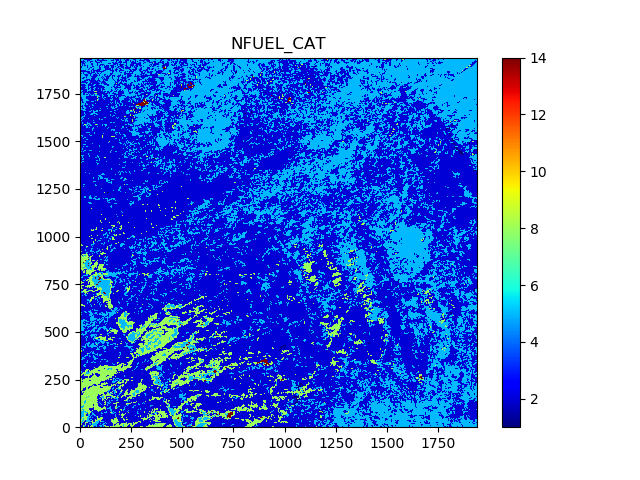

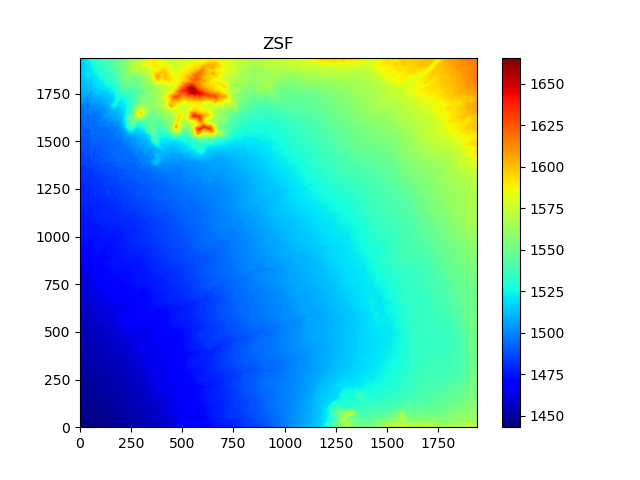

Here, we have visualized the fire grid variables, NFUEL_CAT and ZSF, as well as the variable HGT_M, which is the elevation data used by the atmospheric model. We can compare ZSF and HGT_M to verify that our data conversion process worked. The colormaps of these two pictures have been aligned, so that we can make a quick visual check. As we see, the two images do have a similar structure and magnitude, but they do seem to suffer some misalignment. Given that the data came from two different sources, in two different projections, the error is relatively minor. Because WPS converts between projections in single precision, by default, there is likely a significant issue with floating point error. We may, in the future, consider making some changes so that this conversion is done in double precision.

Obtaining atmospheric data

There are a number of datasets available to initialize a WRF real run. The WRF users page lists a few. One challenge in running a fire simulation is finding a dataset of sufficient resolution. One (relatively) high resolution data source is the Climate Forecast System (CFS). This is still only 56 km resolution, so no small scale weather patterns will appear in our simulation. In general, we will want to run a series of nested domains in order to catch some small scale weather features; however, we will proceed with a single domain example.

The CFSR datasets are available at the following website, https://www.ncei.noaa.gov/data/climate-forecast-system/access/operational-analysis. We will browse to the pressure and surface directories containing the data for September 08, 2018. Our simulation runs from hour 00 to hour 06 on this day, so we will download the pressure grib files for hours 00 and 06, and the surface grib files for hours 00 and 06.

You can also get these files here, CFSR_20180908_00-06.tar.gz.

Running ungrib

With the grib files downloaded, we need to process them separately for pressure and surface variables. We need to link the pressure GRIB files into the WPS directory using the script link_grib.csh. This script takes as arguments all of the grib files that are needed for the simulation. In this case, we can run the following command in the WPS directory.

./link_grib.csh <path to>/CFSR_20180908_00-06/pressure/*.grib2

Substitute <path to> with the directory in which you have saved the grib files. This command creates a series of symbolic links with a predetermined naming sequence to all of the grib files you pass as arguments. You should now have two new soft links named GRIBFILE.AAA and GRIBFILE.AAB.

With the proper links in place, we need to tell ungrib what they contain. This is done by copying a variable table into the main WPS directory. Several variable tables are distributed with WPS which describe common datasets. You can find these in the directory WPS/ungrib/Variable_Tables. In particular, the file which corresponds to the CFSR grib files is called Vtable.CFSR, so we issue the following command to copy it into the current directory.

cp ungrib/Variable_Tables/Vtable.CFSR Vtable

We are now ready to run the ungrib executable.

./ungrib.exe

This will create two files in the current directory named COLMET:2018-09-08_00 and COLMET:2018-09-08_06. We need to rename them before processing the surface variables:

mv COLMET:2018-09-08_00 COLMET_P:2018-09-08_00
mv COLMET:2018-09-08_06 COLMET_P:2018-09-08_06

Then remove the GRIBFILE.* links:

rm GRIBFILE.*

Now we can repeat the process for the surface variables.

./link_grib.csh <path to>/CFSR_20180908_00-06/surface/*.grib2

Substitute <path to> with the directory in which you have saved the grib files. You should now have two new soft links named GRIBFILE.AAA and GRIBFILE.AAB. We are now ready to run the ungrib executable again.

./ungrib.exe

This will create two files in the current directory named COLMET:2018-09-08_00 and COLMET:2018-09-08_06. We need to rename them as well:

mv COLMET:2018-09-08_00 COLMET_S:2018-09-08_00
mv COLMET:2018-09-08_06 COLMET_S:2018-09-08_06

The four files COLMET_P:2018-09-08_00, COLMET_P:2018-09-08_06, COLMET_S:2018-09-08_00, and COLMET_S:2018-09-08_06 are the resulting files which can be downloaded here, ungrib_output.tar.gz.
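For longer simulations, renaming every output file by hand becomes tedious. The rename step can be sketched as a small function; the name rename_ungrib_output is hypothetical, and it assumes that ungrib writes its files as PREFIX:DATE in the current directory, as in the example above.

```shell
# Hypothetical helper: rename PREFIX:<date> files to PREFIX_<tag>:<date>.
# $1 = ungrib output prefix (COLMET here), $2 = tag (P for pressure, S for surface)
rename_ungrib_output() {
    for f in "$1":*; do
        [ -e "$f" ] || continue           # the pattern matched nothing
        mv "$f" "${1}_${2}:${f#*:}"       # keep everything after the first colon
    done
}

# Usage, mirroring the two passes described above:
#   ./link_grib.csh <path to>/CFSR_20180908_00-06/pressure/*.grib2
#   ./ungrib.exe && rename_ungrib_output COLMET P && rm GRIBFILE.*
#   ./link_grib.csh <path to>/CFSR_20180908_00-06/surface/*.grib2
#   ./ungrib.exe && rename_ungrib_output COLMET S
```

Because the pattern requires a colon directly after the prefix, files that were already renamed to COLMET_P:... are left untouched by the second pass.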

Running metgrid

Metgrid will take the files created by ungrib and geogrid and combine them into a set of files. At this point, all we need to do is run it.

./metgrid.exe

This creates two files named met_em.d01.2018-09-08_00:00:00.nc and met_em.d01.2018-09-08_06:00:00.nc, which you can download here, metgrid_output.tar.gz.

Running WRF-SFIRE

We are now finished with all steps involving WPS. All we need to do is copy the metgrid output files to our WRF real run directory at WRF-SFIRE/test/em_real and configure our WRF namelist. We need to be sure that the domain description in namelist.input matches that of the namelist.wps we created previously, otherwise WRF will refuse to run. Pay particular attention to the start/stop times and the grid sizes. The fire ignition parameters are configured in the same way as for the ideal case. The relevant portions of the namelist we will use are given below.

&time_control
 run_days = 0
 run_hours = 6
 run_minutes = 0
 run_seconds = 0
 start_year = 2018
 start_month = 9
 start_day = 8
 start_hour = 0
 start_minute = 0
 start_second = 0
 end_year = 2018
 end_month = 9
 end_day = 8
 end_hour = 6
 end_minute = 0
 end_second = 0
 interval_seconds = 21600
 input_from_file = .true.
 history_interval = 30
 frames_per_outfile = 1000
 restart = .false.
 restart_interval = 180
 io_form_history = 2
 io_form_restart = 2
 io_form_input = 2
 io_form_boundary = 2
 debug_level = 1
/
&domains
 time_step = 0
 time_step_fract_num = 1
 time_step_fract_den = 2
 max_dom = 1
 s_we = 1
 e_we = 97
 s_sn = 1
 e_sn = 97
 s_vert = 1
 e_vert = 41
 num_metgrid_levels = 38
 num_metgrid_soil_levels = 4
 dx = 100
 dy = 100
 grid_id = 1
 parent_id = 1
 i_parent_start = 1
 j_parent_start = 1
 parent_grid_ratio = 1
 parent_time_step_ratio = 1
 feedback = 1
 smooth_option = 0
 sr_x = 20
 sr_y = 20
 sfcp_to_sfcp = .true.
 p_top_requested = 10000
/
&bdy_control
 spec_bdy_width = 5
 spec_zone = 1
 relax_zone = 4
 specified = .true.
 periodic_x = .false.
 symmetric_xs = .false.
 symmetric_xe = .false.
 open_xs = .false.
 open_xe = .false.
 periodic_y = .false.
 symmetric_ys = .false.
 symmetric_ye = .false.
 open_ys = .false.
 open_ye = .false.
 nested = .false.
/
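Since a mismatch between the two namelists stops WRF, it can help to compare the shared settings before running. The sketch below uses hypothetical function names (nml_get, check_domain_match) and makes three assumptions: each variable sits on its own line in the actual files, values are compared as text (so 100 and 100. would be reported as different), and subgrid_ratio_x/subgrid_ratio_y in namelist.wps correspond to sr_x/sr_y in namelist.input, as the values of 20 in both files suggest.

```shell
# Hypothetical helper: print the first value assigned to a namelist variable.
# $1 = namelist file, $2 = variable name
nml_get() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*\([^,[:space:]]*\).*/\1/p" "$1" | head -n 1
}

# Hypothetical helper: compare the settings that both namelists must agree on.
# $1 = namelist.wps, $2 = namelist.input
check_domain_match() {
    match_status=0
    for pair in e_we:e_we e_sn:e_sn dx:dx dy:dy \
                interval_seconds:interval_seconds \
                subgrid_ratio_x:sr_x subgrid_ratio_y:sr_y; do
        wps_key=${pair%%:*}      # name used in namelist.wps
        input_key=${pair##*:}    # name used in namelist.input
        a=$(nml_get "$1" "$wps_key")
        b=$(nml_get "$2" "$input_key")
        if [ "$a" != "$b" ]; then
            echo "MISMATCH: $wps_key=$a (namelist.wps) vs $input_key=$b (namelist.input)"
            match_status=1
        fi
    done
    return "$match_status"
}

# Usage, from WRF-SFIRE/test/em_real with WPS at the same level as WRF-SFIRE:
#   check_domain_match ../../../WPS/namelist.wps namelist.input
```

The start and end times are stored in different forms in the two files (a single date string versus separate year, month, day, and hour entries), so they are left out of this sketch and still need to be compared by hand.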

It is worth mentioning the different ifire options implemented:

- ifire = 1: WRF-SFIRE code up to date

- ifire = 2: Fire code from 2012 in WRF with changes at NCAR

Visit README-SFIRE.md for more details.

The full namelist used can be found at pastebin or namelist.input.

Once the namelist is properly configured we run the WRF real preprocessor.

./real.exe

This creates the initial and boundary files for the WRF simulation and fills all missing fields from the grib data with reasonable defaults. The files that it produces are wrfbdy_d01 and wrfinput_d01, which can be downloaded here, wrf_real_output.tar.gz.

To prepare for running the fire model, copy its parameters here:

cp ../em_fire/hill/namelist.fire .
cp ../em_fire/hill/namelist.fire_emissions .

Finally, we run the simulation.

./wrf.exe

The history file for this example can be downloaded here, wrf_real_history.tar.gz.
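To confirm that real.exe and wrf.exe finished normally, one can look for the completion message that both programs print at the end of a successful run. The helper below is a minimal sketch with a hypothetical name; which file holds the message depends on the build, and the file names in the usage comments are assumptions (serial builds print to the terminal, while distributed-memory builds write rsl.error.* files).

```shell
# Hypothetical helper: succeed if a log contains the completion message.
# $1 = log file to inspect
run_succeeded() {
    grep -q 'SUCCESS COMPLETE' "$1"
}

# Usage for a serial build, capturing the output in a log file:
#   ./real.exe > real.log 2>&1 && run_succeeded real.log && echo "real.exe finished"
#   ./wrf.exe > wrf.log 2>&1 && run_succeeded wrf.log && echo "wrf.exe finished"
# Usage for a distributed-memory build:
#   run_succeeded rsl.error.0000 && echo "run finished"
```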